cmd/compile: k8s scalability load test failure (latency regression) with unified IR #54593

Labels: compiler/runtime, FrozenDueToAge, NeedsInvestigation, Performance, release-blocker

Milestone

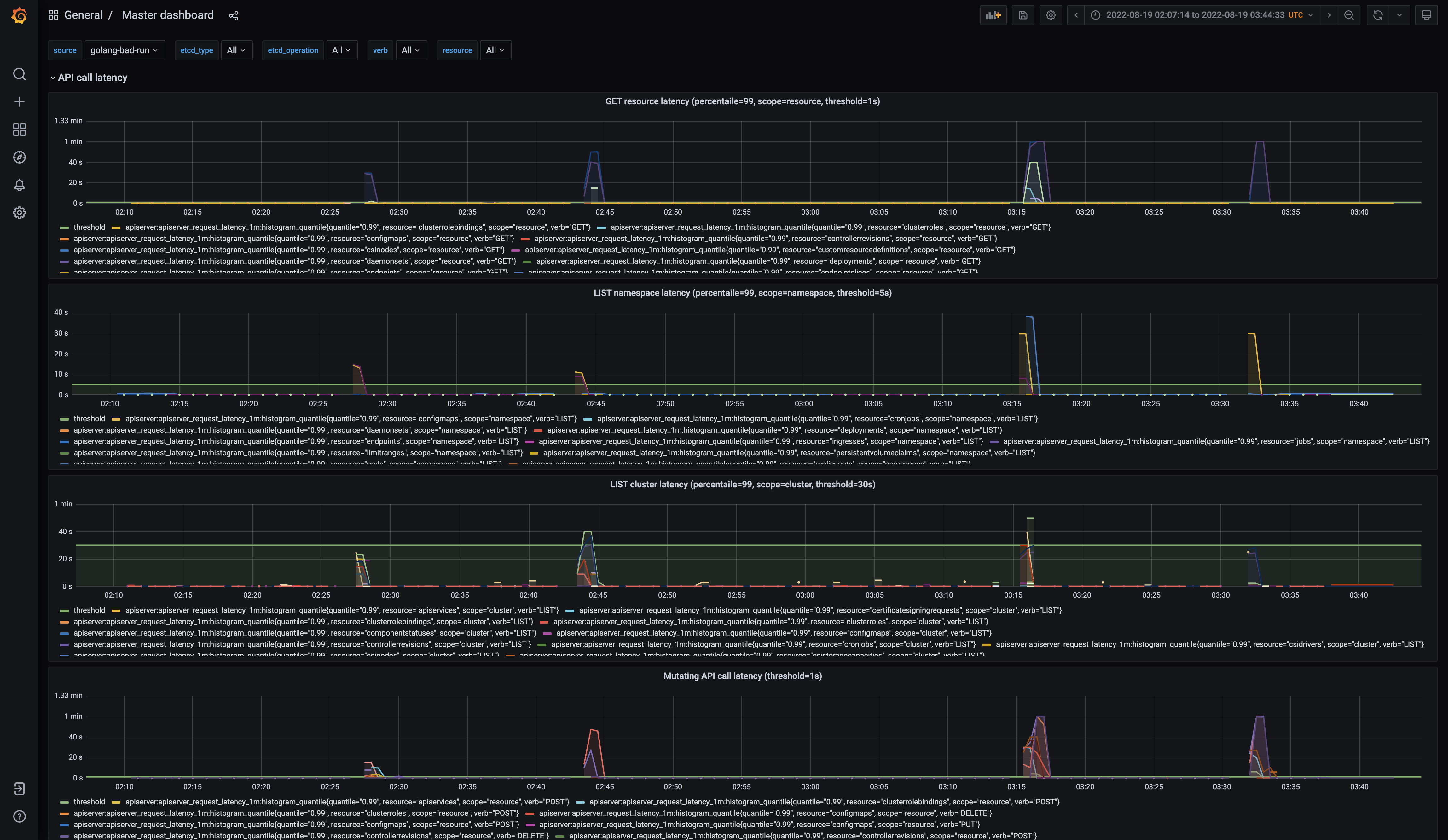

Kubernetes runs a nightly load test built with Go tip. Recently there was a regression in latency that caused various thresholds to be exceeded.

(See the "gathering measurements" step, though on its own it isn't very descriptive.)

The team has narrowed the culprit down to

It's unclear at this time what the issue is. We're currently waiting on more metrics to investigate this more thoroughly.

CC @mdempsky
