-

Notifications

You must be signed in to change notification settings - Fork 17.9k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

go/parser,go/types: memory usage doubled in 1.17 #49035

Comments

|

#45580 indicated there shouldn't be such an increase. |

|

cc @findleyr |

|

CC @griesemer Thanks for reporting, and for the repro. I can investigate. I don't think go/types memory usage more than doubled in 1.17 generally -- we'd have known by now if that were the case. But it seems likely based on this report that there is a significant regression that this repro exercises. #45580 was a best-effort audit of the changes, but certainly could have missed something. |

|

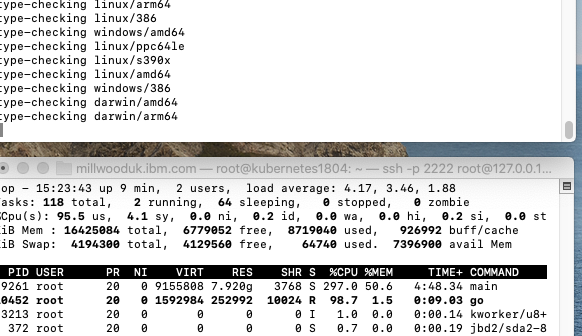

Hello @findleyr Thanks for taking a look at this. I was trying to work out how I could provide more insight into what is going on, and ended up increasing the memory of my VM to 16Gb and using the |

|

Here's what

|

|

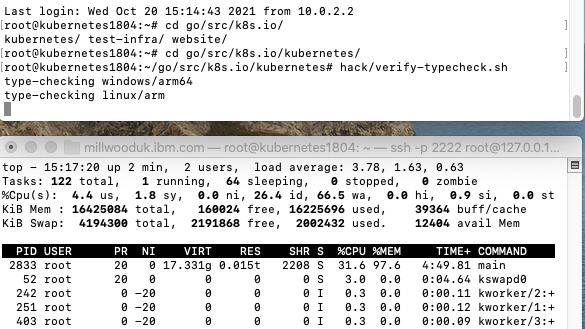

And here's the result for current tip 4e565f7: So sounds like the cc @findleyr |

|

Thanks for looking into this @danmillwood and @cuonglm! I confess I haven't gotten to debugging this yet. The sanitizer sounds like a highly likely culprit. It will produce a lot of garbage, which in the context of something like gopls' steady-state memory might not be noticeable, but could easily spike the high-water mark in a script. This is probably a big enough regression to fix for the next point release. We can just remove the sanitization pass in 1.17, as it's not necessary for non-generic code, and no longer applies at tip 😞. Before patching, I'll need to confirm that this is the problem. I can do this, but @cuonglm or @danmillwood if you're interested you can try commenting out the sanitization pass here: https://cs.opensource.google/go/go/+/refs/tags/go1.17.2:src/go/types/check.go;l=278;drc=1607c2817241bd141af9331a3e6c3148e5cd5d8b |

|

In the sanitizer, is is the maps used to detect duplicates? Otherwise, w/o generics, no allocations (but perhaps for implicit allocations due to non-careful coding) should happen. Would be good to verify. |

|

@griesemer makes a good point. I assumed it was the map writes, but it would be good to confirm via |

|

@findleyr Here's the result with But even with this patch, running |

|

Sorry for the delay on this; it got pushed back by work toward the 1.18 freeze. This still warrants investigation, and possibly a cherry-picked fix, particularly considering other reports like #49883. I will try to get to this by the end of next week. |

|

Unfortunately this didn't get done, sorry. We're now on Go 1.19, so I expect this is now obsolete as both supported Go versions have the fix. |

What version of Go are you using (

go version)?Does this issue reproduce with the latest release?

Yes (assuming 1.17.2 is latest release

What operating system and processor architecture are you using (

go env)?go envOutputWhat did you do?

The Kubernetes open source code includes a verification step that performs cross compile validation for a number of target systems. Im not familiar with the code but the README states that it does the following:

https://github.com/kubernetes/kubernetes/blob/master/test/typecheck/README

With go 1.16 and below, these checks could be run on a system with 8GB RAM. With go 1.17.2 this now requires more than double the amount of RAM to avoid out of memory issues. It appears something in go 1.17 is using significantly more memory.

The shell script to run the check is here https://github.com/kubernetes/kubernetes/blob/master/hack/verify-typecheck.sh

Recreating the OOM can be done by pulling down the Kubernetes open source package from https://github.com/kubernetes/kubernetes and running

hack/verify-typecheck.shwith a go 1.17.2 compiler on a Linux VM with 8GB memory.What did you expect to see?

I modified

hack/lib/golang.shto setminimum_go_version=go1.16.0and ran the test with go 1.16.9. The result wasWhat did you see instead?

With go 1.17.2 I get

Id like to be able to let the kubernetes community know whether this is a performance change that can be fixed, or whether folks verifying a kubernetes build will need significantly more memory.

The text was updated successfully, but these errors were encountered: