runtime: 2-3x memory usage when running in container increase in 1.16 vs 1.15 (MADV_DONTNEED related) #44554

Comments

cc @mknyszek

@pitr have you looked at a memory profile to see where the extra memory is being used? note …

It's very hard for me to tell whether this is a purely runtime thing just from the information you've provided. My first question is what (in very precise terms) that graph is showing. RSS? RSS+swap? RSS+swap+cache? Some …

After knowing that, the next thing to determine is whether the extra memory is actually just stale free memory or fragmentation or something (that's more likely a runtime issue), or whether more objects are alive (this could be a runtime issue, but also maybe a compiler issue, an issue with a standard library package, or something else). For this purpose, running your application with the environment variable …

As @seankhliao suggested, looking at a memory profile (specifically one for each of the Go 1.15 and Go 1.16 runs) would be helpful too, because if more objects are actually live, the profiles will tell you which ones.

Lastly, the most effective thing here (since the difference is so significant) is to just start bisecting the Go repository between 1.15 and 1.16 to pinpoint the exact change that caused the issue. I would normally do that last one myself, but alas I need steps to reproduce. I can help you with building the Go toolchain and then building your application with it if it comes to that.

|

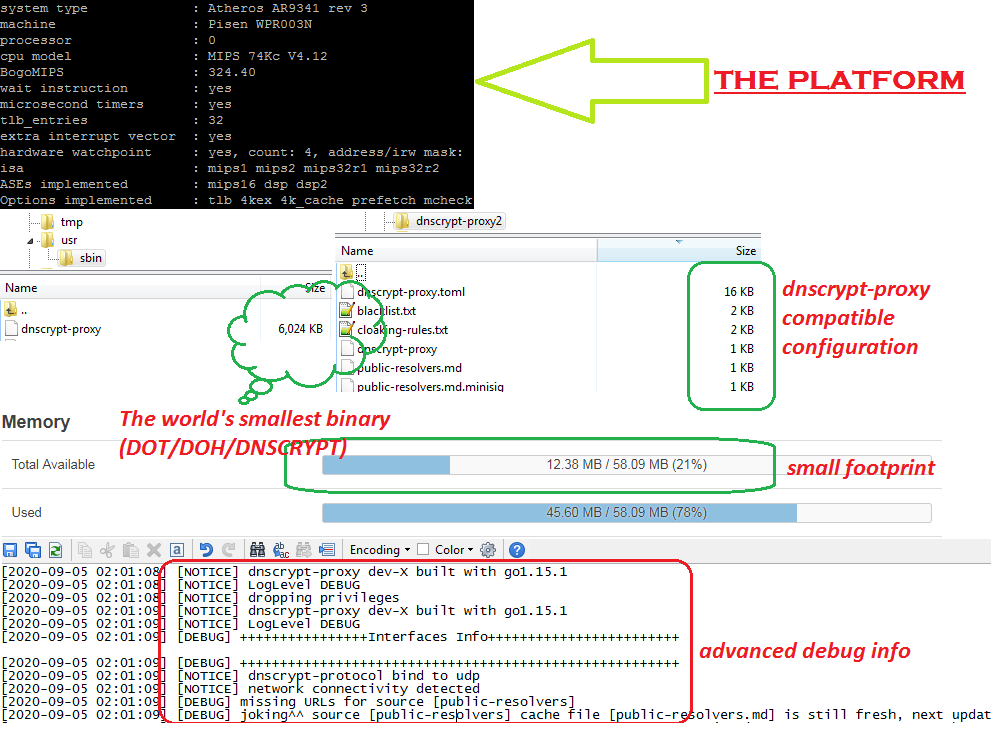

To add to @pitr a bit of environment information: |

|

I can confirm the phenomenon happens to the repique. |

|

I was able to look into both gctrace and pprof (inuse_space). Since I already pinpointed that this issue can be replicated simply by switching …

First, madvdontneed=1 (go1.16): average container_memory_working_set_bytes is 434MB.

madvdontneed=0 (go1.16): average container_memory_working_set_bytes is 167MB, stabilized at 130MB after 1 hour.

```
Feb 26 13:30:20.055 gc 1 @0.041s 0%: 0.021+0.40+0.028 ms clock, 0.17+0.22/0.46/0.38+0.22 ms cpu, 4->4->0 MB, 5 MB goal, 8 P
Feb 26 13:30:20.088 gc 2 @0.072s 2%: 1.1+0.96+0.046 ms clock, 8.9+1.2/0.76/0+0.36 ms cpu, 4->4->1 MB, 5 MB goal, 8 P
Feb 26 13:30:20.174 gc 3 @0.160s 1%: 0.039+0.73+0.065 ms clock, 0.31+0/0.71/1.1+0.52 ms cpu, 4->4->2 MB, 5 MB goal, 8 P
Feb 26 13:30:20.191 gc 4 @0.171s 1%: 0.038+3.1+0.043 ms clock, 0.30+0/3.1/0.97+0.34 ms cpu, 4->5->4 MB, 5 MB goal, 8 P
Feb 26 13:30:20.231 gc 5 @0.213s 1%: 0.033+3.9+0.26 ms clock, 0.26+0/4.1/1.0+2.1 ms cpu, 12->13->9 MB, 13 MB goal, 8 P
Feb 26 13:30:20.289 gc 6 @0.271s 1%: 0.027+5.0+0.039 ms clock, 0.22+0/0.75/5.7+0.31 ms cpu, 21->21->17 MB, 22 MB goal, 8 P
Feb 26 13:30:20.420 gc 7 @0.399s 1%: 0.077+6.8+0.035 ms clock, 0.61+0/7.4/0.98+0.28 ms cpu, 50->50->37 MB, 51 MB goal, 8 P
Feb 26 13:30:20.589 gc 8 @0.569s 0%: 0.029+6.8+0.24 ms clock, 0.23+0/7.1/2.7+1.9 ms cpu, 78->78->58 MB, 79 MB goal, 8 P
Feb 26 13:30:20.704 gc 9 @0.684s 0%: 0.086+5.7+0.062 ms clock, 0.68+0.27/8.3/16+0.49 ms cpu, 113->114->80 MB, 116 MB goal, 8 P
Feb 26 13:30:20.969 gc 10 @0.945s 0%: 0.046+10+0.020 ms clock, 0.37+0.20/21/50+0.16 ms cpu, 157->157->108 MB, 161 MB goal, 8 P
Feb 26 13:31:01.927 gc 11 @41.885s 0%: 0.93+27+0.005 ms clock, 7.4+11/54/82+0.044 ms cpu, 212->212->95 MB, 217 MB goal, 8 P
Feb 26 13:31:33.382 gc 12 @73.345s 0%: 0.090+22+0.036 ms clock, 0.72+0/44/86+0.29 ms cpu, 189->189->132 MB, 191 MB goal, 8 P
Feb 26 13:31:34.177 gc 13 @74.133s 0%: 0.055+29+0.030 ms clock, 0.44+0.41/59/147+0.24 ms cpu, 259->264->183 MB, 265 MB goal, 8 P
Feb 26 13:33:06.916 gc 14 @166.869s 0%: 0.29+32+0.005 ms clock, 2.3+81/65/17+0.047 ms cpu, 358->362->97 MB, 367 MB goal, 8 P
Feb 26 13:33:33.629 gc 15 @193.586s 0%: 0.058+29+0.030 ms clock, 0.46+0.80/57/136+0.24 ms cpu, 189->192->135 MB, 194 MB goal, 8 P
Feb 26 13:34:33.442 gc 16 @253.405s 0%: 0.14+22+0.031 ms clock, 1.1+0.51/45/108+0.25 ms cpu, 264->267->120 MB, 271 MB goal, 8 P
Feb 26 13:35:33.353 gc 17 @313.320s 0%: 0.086+19+0.030 ms clock, 0.68+0/38/102+0.24 ms cpu, 237->237->116 MB, 240 MB goal, 8 P
Feb 26 13:36:33.190 gc 18 @373.156s 0%: 0.22+19+0.029 ms clock, 1.7+0/38/99+0.23 ms cpu, 228->228->95 MB, 232 MB goal, 8 P
Feb 26 13:37:01.917 gc 19 @401.869s 0%: 0.20+34+0.058 ms clock, 1.6+99/59/0.89+0.46 ms cpu, 186->193->99 MB, 191 MB goal, 8 P
Feb 26 13:37:33.534 gc 20 @433.499s 0%: 0.065+21+0.046 ms clock, 0.52+0.33/42/105+0.36 ms cpu, 188->190->117 MB, 198 MB goal, 8 P
Feb 26 13:38:33.251 gc 21 @493.217s 0%: 0.15+20+0.036 ms clock, 1.2+0/39/106+0.29 ms cpu, 230->230->104 MB, 234 MB goal, 8 P
Feb 26 13:39:08.907 gc 22 @528.871s 0%: 0.45+22+0.005 ms clock, 3.6+22/44/83+0.046 ms cpu, 204->205->94 MB, 209 MB goal, 8 P
Feb 26 13:39:33.501 gc 23 @553.462s 0%: 0.12+24+0.028 ms clock, 0.98+0.37/49/118+0.22 ms cpu, 183->186->128 MB, 188 MB goal, 8 P
Feb 26 13:40:33.410 gc 24 @613.374s 0%: 0.12+22+0.030 ms clock, 1.0+0.32/44/107+0.24 ms cpu, 249->252->115 MB, 256 MB goal, 8 P
Feb 26 13:41:33.252 gc 25 @673.219s 0%: 0.10+19+0.005 ms clock, 0.85+0/38/100+0.044 ms cpu, 230->230->105 MB, 231 MB goal, 8 P
Feb 26 13:42:17.925 gc 26 @717.878s 0%: 0.23+33+0.099 ms clock, 1.9+82/66/0+0.79 ms cpu, 205->210->101 MB, 210 MB goal, 8 P
Feb 26 13:42:33.441 gc 27 @733.402s 0%: 0.13+25+0.029 ms clock, 1.0+0.48/50/103+0.23 ms cpu, 195->197->136 MB, 202 MB goal, 8 P
Feb 26 13:43:06.916 gc 28 @766.869s 0%: 0.42+32+0.007 ms clock, 3.4+77/64/13+0.056 ms cpu, 266->271->98 MB, 273 MB goal, 8 P
Feb 26 13:43:33.381 gc 29 @793.344s 0%: 0.071+23+0.050 ms clock, 0.57+0/46/96+0.40 ms cpu, 190->191->134 MB, 196 MB goal, 8 P
Feb 26 13:43:45.562 gc 30 @805.528s 0%: 0.10+19+0.006 ms clock, 0.84+0.36/39/114+0.050 ms cpu, 261->261->93 MB, 268 MB goal, 8 P
Feb 26 13:44:33.232 gc 31 @853.193s 0%: 0.13+25+0.028 ms clock, 1.0+5.0/46/95+0.22 ms cpu, 189->197->129 MB, 190 MB goal, 8 P
Feb 26 13:44:34.041 gc 32 @853.992s 0%: 0.050+35+0.030 ms clock, 0.40+0.49/68/156+0.24 ms cpu, 248->252->170 MB, 259 MB goal, 8 P
Feb 26 13:45:33.509 gc 33 @913.467s 0%: 0.091+27+0.039 ms clock, 0.73+0.29/54/135+0.31 ms cpu, 331->334->137 MB, 340 MB goal, 8 P
Feb 26 13:46:33.379 gc 34 @973.342s 0%: 0.19+22+0.025 ms clock, 1.5+0.53/44/92+0.20 ms cpu, 267->269->127 MB, 274 MB goal, 8 P
Feb 26 13:46:34.171 gc 35 @974.124s 0%: 0.056+32+0.033 ms clock, 0.45+0.79/64/159+0.27 ms cpu, 249->254->181 MB, 255 MB goal, 8 P
Feb 26 13:48:15.902 gc 36 @1075.868s 0%: 0.27+19+0.008 ms clock, 2.1+11/38/92+0.067 ms cpu, 354->355->93 MB, 363 MB goal, 8 P
Feb 26 13:48:33.479 gc 37 @1093.440s 0%: 0.14+24+0.040 ms clock, 1.1+0.31/48/121+0.32 ms cpu, 183->185->129 MB, 187 MB goal, 8 P
Feb 26 13:49:33.604 gc 38 @1153.564s 0%: 0.088+26+0.029 ms clock, 0.71+0.30/51/113+0.23 ms cpu, 252->255->118 MB, 259 MB goal, 8 P
Feb 26 13:50:33.385 gc 39 @1213.350s 0%: 0.15+21+0.029 ms clock, 1.2+0/42/104+0.23 ms cpu, 242->243->117 MB, 243 MB goal, 8 P
Feb 26 13:51:33.251 gc 40 @1273.217s 0%: 0.10+20+0.034 ms clock, 0.83+0/40/105+0.27 ms cpu, 232->232->105 MB, 234 MB goal, 8 P
Feb 26 13:52:17.926 gc 41 @1317.878s 0%: 0.28+33+0.10 ms clock, 2.2+100/66/0+0.83 ms cpu, 205->210->101 MB, 210 MB goal, 8 P
Feb 26 13:52:33.556 gc 42 @1333.512s 0%: 0.13+29+0.026 ms clock, 1.1+0.52/58/144+0.20 ms cpu, 194->198->140 MB, 202 MB goal, 8 P
Feb 26 13:53:33.546 gc 43 @1393.503s 0%: 0.068+29+0.079 ms clock, 0.54+0.34/57/139+0.63 ms cpu, 273->274->139 MB, 281 MB goal, 8 P
Feb 26 13:54:33.804 gc 44 @1453.764s 0%: 0.12+26+0.031 ms clock, 1.0+0.29/51/128+0.25 ms cpu, 272->275->135 MB, 279 MB goal, 8 P
Feb 26 13:55:33.645 gc 45 @1513.604s 0%: 0.087+26+0.038 ms clock, 0.70+0.53/53/131+0.30 ms cpu, 263->266->132 MB, 270 MB goal, 8 P
Feb 26 13:56:33.456 gc 46 @1573.417s 0%: 0.18+24+0.029 ms clock, 1.4+0.27/49/121+0.23 ms cpu, 259->262->125 MB, 265 MB goal, 8 P
Feb 26 13:57:33.452 gc 47 @1633.415s 0%: 0.081+22+0.031 ms clock, 0.65+0.39/44/109+0.25 ms cpu, 245->248->118 MB, 251 MB goal, 8 P
Feb 26 13:58:33.201 gc 48 @1693.160s 0%: 0.13+26+0.017 ms clock, 1.0+0/52/92+0.13 ms cpu, 232->232->107 MB, 237 MB goal, 8 P
Feb 26 13:58:33.530 gc 49 @1693.483s 0%: 0.12+33+0.033 ms clock, 1.0+5.0/66/127+0.27 ms cpu, 214->217->180 MB, 215 MB goal, 8 P
Feb 26 13:59:33.292 gc 50 @1753.243s 0%: 0.13+35+0.037 ms clock, 1.0+22/60/106+0.29 ms cpu, 357->358->129 MB, 360 MB goal, 8 P
Feb 26 13:59:33.608 gc 51 @1753.544s 0%: 0.74+48+0.056 ms clock, 5.9+50/97/133+0.45 ms cpu, 270->274->219 MB, 271 MB goal, 8 P
Feb 26 14:00:33.280 gc 52 @1813.213s 0%: 0.14+53+0.031 ms clock, 1.1+19/55/79+0.24 ms cpu, 425->441->137 MB, 438 MB goal, 8 P
Feb 26 14:00:33.575 gc 53 @1813.514s 0%: 0.19+47+0.037 ms clock, 1.5+23/92/116+0.30 ms cpu, 274->277->215 MB, 275 MB goal, 8 P
Feb 26 14:01:33.254 gc 54 @1873.213s 0%: 0.079+27+0.028 ms clock, 0.63+15/43/92+0.23 ms cpu, 412->420->129 MB, 431 MB goal, 8 P
Feb 26 14:01:34.192 gc 55 @1874.146s 0%: 0.078+31+0.035 ms clock, 0.62+0.23/62/155+0.28 ms cpu, 250->253->171 MB, 259 MB goal, 8 P
Feb 26 14:02:33.415 gc 56 @1933.374s 0%: 0.15+26+0.046 ms clock, 1.2+0.36/52/115+0.37 ms cpu, 333->336->133 MB, 342 MB goal, 8 P
Feb 26 14:03:01.928 gc 57 @1961.878s 0%: 0.29+35+0.006 ms clock, 2.3+64/70/26+0.051 ms cpu, 261->266->100 MB, 267 MB goal, 8 P
Feb 26 14:03:33.401 gc 58 @1993.366s 0%: 0.082+21+0.038 ms clock, 0.66+0.38/43/106+0.30 ms cpu, 195->198->130 MB, 201 MB goal, 8 P
Feb 26 14:03:34.221 gc 59 @1994.174s 0%: 0.052+34+0.030 ms clock, 0.42+0.29/67/168+0.24 ms cpu, 254->258->186 MB, 260 MB goal, 8 P
Feb 26 14:04:33.532 gc 60 @2053.488s 0%: 0.23+30+0.024 ms clock, 1.8+21/59/125+0.19 ms cpu, 395->396->182 MB, 396 MB goal, 8 P
Feb 26 14:05:33.273 gc 61 @2113.239s 0%: 0.10+20+0.045 ms clock, 0.83+0/40/102+0.36 ms cpu, 361->361->137 MB, 365 MB goal, 8 P
Feb 26 14:05:33.652 gc 62 @2113.599s 0%: 0.10+39+0.044 ms clock, 0.84+0.59/77/117+0.35 ms cpu, 268->273->208 MB, 275 MB goal, 8 P
Feb 26 14:06:33.454 gc 63 @2173.414s 0%: 0.19+26+0.044 ms clock, 1.5+0.31/52/125+0.35 ms cpu, 407->410->122 MB, 417 MB goal, 8 P
Feb 26 14:07:33.221 gc 64 @2233.184s 0%: 0.10+22+0.014 ms clock, 0.82+0/44/103+0.11 ms cpu, 245->245->121 MB, 246 MB goal, 8 P
Feb 26 14:07:33.990 gc 65 @2233.944s 0%: 0.053+32+0.029 ms clock, 0.42+0.29/60/141+0.23 ms cpu, 237->241->164 MB, 243 MB goal, 8 P
Feb 26 14:08:33.290 gc 66 @2293.250s 0%: 0.12+26+0.021 ms clock, 0.98+6.7/51/88+0.17 ms cpu, 329->329->133 MB, 330 MB goal, 8 P
Feb 26 14:08:33.596 gc 67 @2293.538s 0%: 0.15+44+0.054 ms clock, 1.2+17/83/121+0.43 ms cpu, 274->278->219 MB, 275 MB goal, 8 P
Feb 26 14:09:33.373 gc 68 @2353.338s 0%: 0.11+20+0.031 ms clock, 0.91+0/41/101+0.24 ms cpu, 430->431->117 MB, 439 MB goal, 8 P
Feb 26 14:10:33.207 gc 69 @2413.172s 0%: 0.12+21+0.027 ms clock, 1.0+0.069/41/104+0.22 ms cpu, 230->230->99 MB, 235 MB goal, 8 P
Feb 26 14:11:01.932 gc 70 @2441.877s 0%: 0.24+40+0.015 ms clock, 1.9+85/77/0+0.12 ms cpu, 193->199->101 MB, 198 MB goal, 8 P
Feb 26 14:11:33.422 gc 71 @2473.388s 0%: 0.076+20+0.039 ms clock, 0.61+0.53/41/101+0.31 ms cpu, 195->197->128 MB, 202 MB goal, 8 P
Feb 26 14:11:40.919 gc 72 @2480.870s 0%: 0.14+35+0.037 ms clock, 1.1+1.0/70/168+0.30 ms cpu, 251->251->148 MB, 257 MB goal, 8 P
Feb 26 14:13:01.915 gc 73 @2561.869s 0%: 0.28+31+0.026 ms clock, 2.3+109/57/0+0.20 ms cpu, 288->294->98 MB, 296 MB goal, 8 P
Feb 26 14:13:33.490 gc 74 @2593.452s 0%: 0.072+23+0.040 ms clock, 0.58+0.39/47/118+0.32 ms cpu, 190->192->126 MB, 196 MB goal, 8 P
Feb 26 14:14:33.406 gc 75 @2653.368s 0%: 0.13+24+0.028 ms clock, 1.1+0.32/47/104+0.22 ms cpu, 246->250->112 MB, 253 MB goal, 8 P
Feb 26 14:15:33.178 gc 76 @2713.144s 0%: 0.091+20+0.039 ms clock, 0.72+0.29/39/102+0.31 ms cpu, 220->220->96 MB, 225 MB goal, 8 P
Feb 26 14:16:06.915 gc 77 @2746.869s 0%: 0.27+32+0.029 ms clock, 2.1+89/63/8.6+0.23 ms cpu, 188->193->98 MB, 193 MB goal, 8 P
Feb 26 14:16:33.652 gc 78 @2773.614s 0%: 0.19+24+0.032 ms clock, 1.5+0.49/48/119+0.26 ms cpu, 189->191->121 MB, 196 MB goal, 8 P
Feb 26 14:17:33.375 gc 79 @2833.340s 0%: 0.096+21+0.031 ms clock, 0.77+0/41/105+0.25 ms cpu, 238->238->117 MB, 243 MB goal, 8 P
Feb 26 14:18:33.049 gc 80 @2893.011s 0%: 0.14+24+0.038 ms clock, 1.1+0.25/47/101+0.30 ms cpu, 230->230->100 MB, 235 MB goal, 8 P
Feb 26 14:18:33.460 gc 81 @2893.421s 0%: 0.057+25+0.071 ms clock, 0.46+0.47/49/103+0.57 ms cpu, 196->199->150 MB, 201 MB goal, 8 P
Feb 26 14:18:33.995 gc 82 @2893.938s 0%: 0.044+43+0.042 ms clock, 0.35+0.44/85/199+0.34 ms cpu, 293->298->186 MB, 300 MB goal, 8 P
Feb 26 14:19:33.374 gc 83 @2953.336s 0%: 0.13+24+0.034 ms clock, 1.1+0.32/47/99+0.27 ms cpu, 365->366->134 MB, 372 MB goal, 8 P
Feb 26 14:19:46.903 gc 84 @2966.870s 0%: 0.38+19+0.005 ms clock, 3.0+0.74/38/109+0.046 ms cpu, 262->262->93 MB, 268 MB goal, 8 P
Feb 26 14:20:33.226 gc 85 @3013.179s 0%: 0.14+32+0.064 ms clock, 1.1+13/63/101+0.51 ms cpu, 191->193->128 MB, 192 MB goal, 8 P
Feb 26 14:20:33.569 gc 86 @3013.519s 0%: 0.15+36+0.052 ms clock, 1.2+9.0/66/107+0.41 ms cpu, 260->264->205 MB, 261 MB goal, 8 P
Feb 26 14:21:33.371 gc 87 @3073.336s 0%: 0.11+21+0.038 ms clock, 0.89+0/42/105+0.30 ms cpu, 409->410->118 MB, 411 MB goal, 8 P
Feb 26 14:22:17.925 gc 88 @3117.878s 0%: 0.27+32+0.056 ms clock, 2.2+102/61/0+0.45 ms cpu, 229->234->101 MB, 236 MB goal, 8 P
Feb 26 14:22:33.457 gc 89 @3133.419s 0%: 0.15+23+0.23 ms clock, 1.2+0.30/46/109+1.9 ms cpu, 195->198->136 MB, 203 MB goal, 8 P
Feb 26 14:23:02.902 gc 90 @3162.869s 0%: 0.13+19+0.007 ms clock, 1.0+1.4/38/107+0.058 ms cpu, 266->266->93 MB, 273 MB goal, 8 P
Feb 26 14:23:33.508 gc 91 @3193.465s 0%: 0.082+28+0.031 ms clock, 0.65+0.48/56/136+0.24 ms cpu, 182->185->131 MB, 187 MB goal, 8 P
Feb 26 14:24:33.418 gc 92 @3253.381s 0%: 0.12+23+0.030 ms clock, 1.0+0.44/45/113+0.24 ms cpu, 256->258->120 MB, 262 MB goal, 8 P
Feb 26 14:25:14.605 gc 93 @3294.572s 0%: 0.090+19+0.006 ms clock, 0.72+2.9/37/104+0.054 ms cpu, 234->234->94 MB, 240 MB goal, 8 P
Feb 26 14:25:33.205 gc 94 @3313.167s 0%: 0.072+24+0.036 ms clock, 0.58+0.49/47/99+0.29 ms cpu, 183->199->123 MB, 188 MB goal, 8 P
Feb 26 14:25:33.632 gc 95 @3313.588s 0%: 0.053+30+0.028 ms clock, 0.43+0.30/60/143+0.23 ms cpu, 235->237->160 MB, 247 MB goal, 8 P
Feb 26 14:26:17.941 gc 96 @3357.888s 0%: 0.49+38+0.038 ms clock, 3.9+81/75/24+0.30 ms cpu, 311->315->105 MB, 320 MB goal, 8 P
Feb 26 14:26:38.921 gc 97 @3378.870s 0%: 0.34+36+0.029 ms clock, 2.7+27/71/104+0.23 ms cpu, 205->207->126 MB, 210 MB goal, 8 P
Feb 26 14:27:33.425 gc 98 @3433.388s 0%: 0.10+23+0.040 ms clock, 0.85+0.32/45/108+0.32 ms cpu, 246->248->133 MB, 252 MB goal, 8 P
Feb 26 14:28:06.910 gc 99 @3466.875s 0%: 0.84+21+0.007 ms clock, 6.7+4.9/42/102+0.059 ms cpu, 260->260->95 MB, 267 MB goal, 8 P
Feb 26 14:28:33.407 gc 100 @3493.372s 0%: 0.13+21+0.038 ms clock, 1.1+0.33/41/102+0.31 ms cpu, 185->187->112 MB, 190 MB goal, 8 P
Feb 26 14:29:25.902 gc 101 @3545.869s 0%: 0.27+18+0.007 ms clock, 2.2+6.6/37/101+0.056 ms cpu, 219->220->93 MB, 225 MB goal, 8 P
Feb 26 14:29:33.733 gc 102 @3553.694s 0%: 0.056+24+0.028 ms clock, 0.45+0.53/48/116+0.23 ms cpu, 182->184->142 MB, 187 MB goal, 8 P
Feb 26 14:30:17.929 gc 103 @3597.885s 0%: 0.20+29+0.006 ms clock, 1.6+13/57/86+0.049 ms cpu, 277->278->98 MB, 284 MB goal, 8 P
Feb 26 14:30:33.545 gc 104 @3613.506s 0%: 0.12+25+0.042 ms clock, 0.96+0.35/49/120+0.33 ms cpu, 192->194->126 MB, 197 MB goal, 8 P
Feb 26 14:31:33.250 gc 105 @3673.215s 0%: 0.092+21+0.006 ms clock, 0.73+0.46/42/109+0.052 ms cpu, 252->252->105 MB, 253 MB goal, 8 P
Feb 26 14:31:43.118 gc 106 @3683.073s 0%: 0.12+31+0.032 ms clock, 1.0+2.3/61/143+0.25 ms cpu, 206->206->124 MB, 211 MB goal, 8 P
Feb 26 14:32:17.916 gc 107 @3717.876s 0%: 0.23+26+0.096 ms clock, 1.9+100/51/0+0.77 ms cpu, 242->246->99 MB, 249 MB goal, 8 P
Feb 26 14:32:33.577 gc 108 @3733.528s 0%: 0.15+35+0.039 ms clock, 1.2+0.084/70/135+0.31 ms cpu, 192->197->138 MB, 199 MB goal, 8 P
Feb 26 14:33:33.402 gc 109 @3793.365s 0%: 0.084+23+0.035 ms clock, 0.67+0.22/47/106+0.28 ms cpu, 269->271->133 MB, 276 MB goal, 8 P
Feb 26 14:33:34.197 gc 110 @3794.151s 0%: 0.054+32+0.033 ms clock, 0.43+0.48/64/160+0.26 ms cpu, 260->264->190 MB, 267 MB goal, 8 P
Feb 26 14:34:38.918 gc 111 @3858.871s 0%: 0.25+33+0.029 ms clock, 2.0+21/66/118+0.23 ms cpu, 371->372->125 MB, 380 MB goal, 8 P
Feb 26 14:35:33.413 gc 112 @3913.375s 0%: 0.12+24+0.097 ms clock, 1.0+0.30/47/100+0.78 ms cpu, 245->247->132 MB, 251 MB goal, 8 P
Feb 26 14:36:06.912 gc 113 @3946.874s 0%: 0.82+24+0.006 ms clock, 6.6+31/47/69+0.052 ms cpu, 257->259->95 MB, 264 MB goal, 8 P
Feb 26 14:36:33.382 gc 114 @3973.343s 0%: 0.22+24+0.040 ms clock, 1.7+0.63/47/106+0.32 ms cpu, 201->202->134 MB, 202 MB goal, 8 P
Feb 26 14:36:43.923 gc 115 @3983.870s 0%: 0.22+39+0.028 ms clock, 1.7+2.2/78/187+0.22 ms cpu, 261->261->148 MB, 268 MB goal, 8 P
Feb 26 14:37:59.107 gc 116 @4059.073s 0%: 0.10+20+0.004 ms clock, 0.86+0.32/40/102+0.038 ms cpu, 289->290->94 MB, 297 MB goal, 8 P
Feb 26 14:38:21.912 gc 117 @4081.869s 0%: 0.25+28+0.008 ms clock, 2.0+60/56/34+0.070 ms cpu, 183->186->96 MB, 188 MB goal, 8 P
Feb 26 14:38:33.355 gc 118 @4093.316s 0%: 0.089+24+0.24 ms clock, 0.71+0/48/91+1.9 ms cpu, 198->199->134 MB, 199 MB goal, 8 P
Feb 26 14:38:36.570 gc 119 @4096.519s 0%: 0.097+37+0.028 ms clock, 0.78+0.36/74/181+0.22 ms cpu, 262->262->147 MB, 269 MB goal, 8 P
Feb 26 14:39:16.107 gc 120 @4136.073s 0%: 0.10+20+0.005 ms clock, 0.81+2.0/40/110+0.045 ms cpu, 288->288->94 MB, 295 MB goal, 8 P
```

Heap size at GC start, at GC end, and live heap are more or less the same, maybe with … Looking at … I am now convinced that the issue is with how I am measuring memory (cadvisor's …
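To check the observation above against the numbers rather than by eye, you can extract the heap figures from each gctrace entry. According to the runtime package documentation for GODEBUG=gctrace=1, "#->#-># MB" gives the heap size at GC start, the heap size at GC end, and the live heap, followed by the goal heap size. This is a minimal sketch that assumes the Go 1.16 line format shown in the log. parseGCTrace, summarize, and heapSample are hypothetical names. The regular expression also copes with entries that have been run together on a single line.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// heapSample holds the heap figures (in MB) from one gctrace entry.
type heapSample struct {
	GC    int // GC cycle number ("gc N")
	Start int // heap size at GC start
	End   int // heap size at GC end
	Live  int // live heap
	Goal  int // goal heap size
}

// gcEntry matches one Go 1.16 gctrace entry:
// "gc N @...s P%: ... ms clock, ... ms cpu, A->B->C MB, G MB goal".
var gcEntry = regexp.MustCompile(`gc (\d+) @[^,]*, [^,]*, (\d+)->(\d+)->(\d+) MB, (\d+) MB goal`)

// parseGCTrace returns every gctrace entry found in text, in order.
func parseGCTrace(text string) []heapSample {
	var out []heapSample
	for _, m := range gcEntry.FindAllStringSubmatch(text, -1) {
		var v [5]int
		for i := range v {
			v[i], _ = strconv.Atoi(m[i+1]) // every group is \d+, so parsing succeeds
		}
		out = append(out, heapSample{GC: v[0], Start: v[1], End: v[2], Live: v[3], Goal: v[4]})
	}
	return out
}

// summarize returns the peak heap size at GC start and the mean live heap.
func summarize(s []heapSample) (peakStart int, meanLive float64) {
	if len(s) == 0 {
		return 0, 0
	}
	total := 0
	for _, x := range s {
		if x.Start > peakStart {
			peakStart = x.Start
		}
		total += x.Live
	}
	return peakStart, float64(total) / float64(len(s))
}

func main() {
	// Two entries copied from the log; paste the full log to analyse all of it.
	trace := "Feb 26 13:30:20.055 gc 1 @0.041s 0%: 0.021+0.40+0.028 ms clock, 0.17+0.22/0.46/0.38+0.22 ms cpu, 4->4->0 MB, 5 MB goal, 8 P " +
		"Feb 26 13:31:01.927 gc 11 @41.885s 0%: 0.93+27+0.005 ms clock, 7.4+11/54/82+0.044 ms cpu, 212->212->95 MB, 217 MB goal, 8 P"
	samples := parseGCTrace(trace)
	peak, meanLive := summarize(samples)
	// prints "2 GCs, peak heap at GC start 212 MB, mean live heap 47.5 MB"
	fmt.Printf("%d GCs, peak heap at GC start %d MB, mean live heap %.1f MB\n", len(samples), peak, meanLive)
}
```

Comparing the peak heap at GC start with the container metric for the same period is a quick way to see how much of the reported usage the Go heap itself can account for.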

Cadvisor's container_working_set_bytes appears to report on the cgroup … I guess that MADV_DONTNEED and MADV_FREE affect the …
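For reference, here is my understanding of how cAdvisor derives the working-set figure: it takes the cgroup's total memory usage (which includes page cache) and subtracts the inactive file-backed pages reported in memory.stat, flooring the result at zero. The key is total_inactive_file on cgroup v1 and inactive_file on cgroup v2. What follows is a minimal sketch of that arithmetic under that assumption. It is not cAdvisor's actual code. parseMemoryStat and workingSet are hypothetical helpers, and the numbers in main are made up.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// parseMemoryStat parses the "key value" lines of a cgroup memory.stat file.
func parseMemoryStat(content string) (map[string]uint64, error) {
	stats := make(map[string]uint64)
	sc := bufio.NewScanner(strings.NewReader(content))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) != 2 {
			continue // skip blank or unexpected lines
		}
		v, err := strconv.ParseUint(fields[1], 10, 64)
		if err != nil {
			return nil, fmt.Errorf("memory.stat %q: %w", fields[0], err)
		}
		stats[fields[0]] = v
	}
	return stats, sc.Err()
}

// workingSet computes usage minus inactive file pages, floored at zero.
// inactiveKey is "total_inactive_file" (cgroup v1) or "inactive_file" (cgroup v2);
// a missing key subtracts nothing.
func workingSet(usage uint64, stats map[string]uint64, inactiveKey string) uint64 {
	inactive := stats[inactiveKey]
	if inactive >= usage {
		return 0
	}
	return usage - inactive
}

func main() {
	// Hypothetical values; real ones come from memory.usage_in_bytes (v1) or
	// memory.current (v2), plus memory.stat.
	stats, err := parseMemoryStat("cache 104857600\nrss 209715200\ntotal_inactive_file 52428800\n")
	if err != nil {
		panic(err)
	}
	fmt.Println(workingSet(314572800, stats, "total_inactive_file")) // prints 262144000
}
```

Under this definition, the working set equals total usage whenever the inactive file count is zero.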

It is odd that … The process that returns memory to the OS (the "scavenger") paces itself according to how long the … Though, there are two holes in this theory:

Alternatively, there's a bug in the scavenger's pacing causing this. @pitr, could you also please provide a log of …

@mknyszek Point 1 is unlikely: @pitr runs the workload in our Kubernetes infrastructure, and I just checked that soft limits do not seem to be set. For example, in one container I see this:
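If "soft limits" here means the cgroup v1 memory.soft_limit_in_bytes setting (an assumption), the check can be scripted from inside the container. This is a minimal sketch that assumes the cgroup v1 memory controller is mounted at /sys/fs/cgroup/memory. parseSoftLimit and the 1<<62 threshold are illustrative, not a documented API. cgroup v1 reports "no limit" as a value near the int64 maximum (rounded down to a page multiple), so the sketch treats anything that large as unset.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
	"strings"
)

// softLimitPath assumes the cgroup v1 memory controller is mounted at the usual place.
const softLimitPath = "/sys/fs/cgroup/memory/memory.soft_limit_in_bytes"

// unlimitedThreshold is an assumed cut-off: values this large mean "no soft limit".
const unlimitedThreshold = 1 << 62

// parseSoftLimit interprets the file contents; ok is false when no limit is set.
func parseSoftLimit(content string) (limit uint64, ok bool, err error) {
	v, err := strconv.ParseUint(strings.TrimSpace(content), 10, 64)
	if err != nil {
		return 0, false, err
	}
	if v >= unlimitedThreshold {
		return v, false, nil
	}
	return v, true, nil
}

func main() {
	b, err := os.ReadFile(softLimitPath)
	if err != nil {
		fmt.Println("could not read soft limit (cgroup v2, or not in a container?):", err)
		return
	}
	limit, ok, err := parseSoftLimit(string(b))
	switch {
	case err != nil:
		fmt.Println("unexpected contents:", err)
	case !ok:
		fmt.Println("no soft limit set")
	default:
		fmt.Printf("soft limit: %d bytes\n", limit)
	}
}
```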

We also ran into this issue. We resolved it by moving back to MADV_FREE. I'm happy to provide more data if it is helpful.

I'm not sure if this is relevant, but I'll share it in case it is. I'm seeing situations in containerized apps where the RAM usage reported by the app itself (by Golang's …) So my tentative theory is that, for some kinds of apps, working set under-reports "true RAM usage". I can imagine how that's possible, since apparently the working set only includes pages that the kernel thinks are recently used. If an app has some pages that it hasn't used in a while, they won't be counted towards the working set.

The bit I don't understand, and the reason I'm not 100% sure of this theory, is that switching to MADV_DONTNEED makes the under-reporting go away. (I.e. it appears as if MADV_DONTNEED didn't make the app use more memory; it just made container_working_set_bytes report the usage more accurately.) But I have no idea why switching to MADV_DONTNEED would make that happen. Is it somehow remedying the under-reporting? Or is it actually causing additional allocations that just happen to approximately balance out the under-reporting (even though the under-reporting continues)? Or is it just introducing a new and different reporting error, as suggested by some of the replies above? I don't know.

In istio/istio#33569 (comment), which may or may not be the same issue as here, I am seeing that with Go 1.16, container_working_set_bytes == container_usage_bytes.

That's a bit of an oversimplification. "Recently used" just means "the application needs it" and the kernel tends to be pretty conservative about this, unless you have some very aggressive swapping policy turned on (if that's an option that exists, even). On Linux, what this looks like is:

This makes sense to me. In general, …

@sganapathy1312 That's somewhat surprising to me. If you can acquire a …

So do I. So it's interesting that in my tests the gctrace figures are higher than container_working_set_bytes.

@JohnRusk Ah, I see. My bad for misreading. That can happen for similar reasons. If you make a large allocation on Linux but never actually touch that memory, it'll never get paged in, so Linux might not count it in RSS. It really depends, though: if it contains pointers such that the GC touches that memory, it could get paged in, and if the Go memory allocator allocates space for it using memory that's already paged in, then it will of course count. If you want to run an experiment, try a small program that creates a 1 GiB byte slice (e.g. …
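Here is a minimal sketch of such an experiment. It assumes Linux, since it reads VmRSS from /proc/self/status, and a 4 KiB page size. rssKiB and measure are hypothetical helpers, not part of any library, and main uses 256 MiB rather than 1 GiB to keep the demo light. The pattern to look for is that RSS barely moves after make and then grows by roughly the slice size once every page has been written. Transparent huge pages or reuse of already-resident heap memory can blur this.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strconv"
	"strings"
)

// rssKiB returns this process's resident set size in KiB, read from the
// VmRSS line of /proc/self/status (Linux only).
func rssKiB() (int64, error) {
	f, err := os.Open("/proc/self/status")
	if err != nil {
		return 0, err
	}
	defer f.Close()
	sc := bufio.NewScanner(f)
	for sc.Scan() {
		fields := strings.Fields(sc.Text()) // e.g. ["VmRSS:", "12345", "kB"]
		if len(fields) >= 2 && fields[0] == "VmRSS:" {
			return strconv.ParseInt(fields[1], 10, 64)
		}
	}
	if err := sc.Err(); err != nil {
		return 0, err
	}
	return 0, fmt.Errorf("VmRSS not found in /proc/self/status")
}

// sink keeps the slice reachable so the GC cannot free it mid-experiment.
var sink []byte

// measure allocates size bytes without touching them, then writes one byte
// per 4 KiB (assumed page size), reporting RSS before, after make, and after touching.
func measure(size int) (before, afterAlloc, afterTouch int64, err error) {
	if before, err = rssKiB(); err != nil {
		return
	}
	sink = make([]byte, size)
	if afterAlloc, err = rssKiB(); err != nil {
		return
	}
	for i := 0; i < len(sink); i += 4096 {
		sink[i] = 1
	}
	afterTouch, err = rssKiB()
	return
}

func main() {
	// The comment suggests 1 GiB (1 << 30); 256 MiB keeps the demo light.
	before, afterAlloc, afterTouch, err := measure(256 << 20)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("RSS before: %d KiB, after make: %d KiB, after touching: %d KiB\n",
		before, afterAlloc, afterTouch)
}
```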

I have the same issue with TiDB running in a Docker container, but the memory seems to increase quickly only up to the first 4GB (the system has 16GB of memory). After that, memory usage is quite stable at 4GB, while pprof/heap reports only hundreds of MB. I'm testing with …

What version of Go are you using (go version)?

Does this issue reproduce with the latest release?

Yes, in 1.16

What operating system and processor architecture are you using (go env)?

What did you do?

After upgrading our application to Go 1.16, it started using 2-3x more memory. Unfortunately I cannot provide steps to replicate this, as it is an internal piece of software.

What did you expect to see?

Expected to see the same memory usage profile or better (due to MADV_DONTNEED being enabled).

What did you see instead?

2 series with low (~50 MB) memory usage are generated by binaries built with Go 1.15. The others are all 1.16. The last series is built with GODEBUG=madvdontneed=0 (which doesn't seem to make any difference).

```
$ cat go.mod
module ...

go 1.16

require (
	github.com/go-ole/go-ole v1.2.5 // indirect
	github.com/go-redis/redis/v7 v7.4.0
	github.com/golang/protobuf v1.4.3 // indirect
	github.com/gorilla/mux v1.8.0
	github.com/magefile/mage v1.11.0 // indirect
	github.com/matryer/is v1.4.0
	github.com/opentracing-contrib/go-stdlib v1.0.0
	github.com/opentracing/opentracing-go v1.2.0
	github.com/rcrowley/go-metrics v0.0.0-20201227073835-cf1acfcdf475
	github.com/shirou/gopsutil v3.21.1+incompatible // indirect
	github.com/sirupsen/logrus v1.8.0
	golang.org/x/net v0.0.0-20210220033124-5f55cee0dc0d // indirect
	golang.org/x/oauth2 v0.0.0-20210220000619-9bb904979d93
	golang.org/x/sys v0.0.0-20210220050731-9a76102bfb43 // indirect
	golang.org/x/text v0.3.5 // indirect
	google.golang.org/appengine v1.6.7 // indirect
	google.golang.org/genproto v0.0.0-20210219173056-d891e3cb3b5b // indirect
	google.golang.org/grpc v1.35.0 // indirect
	gopkg.in/yaml.v2 v2.2.5 // indirect
)
```