New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

proposal: database/sql: use a stack-based instead of a queue-based approach for connection pool #43173

Comments

|

@agnivade I'm not aware of any initial design docs on this aspect of database/sql. It is my impression when Brad created the first version of this his intent was was to make the simplest implementation. How large is the impact on the code base? In a v2, I would really like to separate out the pool implementation, though have a default pool. |

My MVP implementation is literally a one-line change. And with the I don't expect it to be more than a 20 line change. Unless there are other things which I am missing at the moment. |

Isn't https://golang.org/pkg/database/sql/#DB.SetConnMaxIdleTime designed expressly for this use case? For the record I think it would be a grave mistake to give up the load balancing property. |

|

As I mentioned above, due to the round-robin property of choosing connections, a connection never really gets a chance to be idle, and hence the idle timeout never hits. Therefore, the connection doesn't drop until In the graphs that I attached, a maxIdleTime of 5 minutes was already set, but still as you can see, nothing happens. It is this problem that the proposal tries to improve. This is more acutely felt in a multi-tenant database, where connections are at a premium. The load balancing property is indeed something that breaks with this proposal. But it's not a either-or choice. Choosing an appropriate idletime and lifetime will still allow an administrator to load balance. If the admin wants to spread the load amongst a large number of connections, they can set a large idle time to handle spikes better. But if number of connections is scarce, then setting a low idle time should reduce the number. Currently, as things are, reducing the idle time effectively is a noop in steady state usage. It's a matter of choosing the right tradeoff of course. My proposal is that this change allows more a more intuitive implementation of the connection pool that the user expects, and which can be tuned according to their needs. |

|

Round-robin works well for load-balanced database clusters. Idle time control is not sufficient to ensure load distribution, because the stack approach causes the nodes with connections at the top of the stack to get the majority of the queries. What you would need is a group of stacks where load is distributed round-robin, with the group size being equal to a multiple of the number of nodes in the cluster. |

|

@agnivade sorry, you're right. I missed that bit. I understand the problem now. Even with that, removing the load balancing property is still something I strongly wish we will not do. It's going to be a surprising change, that will likely cause pain to users with certain usage patterns (full disclosure: including us). I would be fine though making it configurable so that people can opt-in to the new behavior if needed. Or, even better, do what @ulikunitz is suggesting (with the group size being set by default to the number of endpoints that the address resolves to). |

|

Issue #31708 (database/sql: connection pool was originally FIFO, is now random, but should be LIFO) looks related. |

|

Yes that solves the problem described by ensuring that connections can be cleared down as soon as possible. |

|

Perfect, thanks @stevenh ! |

|

This proposal has been declined as retracted. |

What version of Go are you using (

go version)?Problem statement

Currently, the

database/sqlimplementation of connection pool uses a queue based approach where it pops a connection from the front of thefreeConnslice while using it, and then adds it at the end of the slice when putting it back to the pool.There are some tradeoffs with this approach. The current approach basically does a round-robin of trying to use all connections in the pool, so a single connection never gets a chance to remain idle for a long time. However, if we always push and pop from the top of the slice, then we always work with the recent connections, allowing the older connections to expire and get reaped.

The new approach allows us to use the set of connections more efficiently, allowing unnecessary connections to expire faster.

Analysis

The main tradeoff that I see is that the old approach load balances queries amongst all connections. So if the user has a very good estimate of the steady-state number of connections required for the app, then the approach does a good job of using all the connections, and avoids the connection creation overhead.

However, if the application can have temporary spikes, or we don't know up-front what can be the max number of connections, and we want to keep some amount of buffer space in case of spikes, then a small spike can use upto max connections, and then it will never go down, until it hits the

ConnMaxLifetime. So the application would be potentially wasting a lot of connections, even if it can do with a lot less.Another advantage of the stack-based approach is that since we would always be operating from the top of the stack, in

connectionCleanerRunLocked, the slice basically remains sorted byc.returnedAtandc.createdAt. So we can just iterate from the back of the slice and stop when we have passedexpiredSince. This locks the cleaner for a shorter duration and scales better, essentially reducing the lock time complexity from O(n) to O(k).Real-life necessity and demo

The need for this came up when I was investigating why the Mattermost app's DB connection usage does not come down when the number of queries have come down. Mattermost is a typical chat application where users will login in the morning, do some work throughout the day, and then logoff.

This effectively means that there will be a connection spike at the start of the day, and then it will wear off. But with the current implementation, the issue is that, even when the RPS drop off, the connection count doesn't drop until the

ConnMaxLifetimehits, which is a significantly larger time than the idle timeout.This a more acute problem in a multi-tenant database where we want to pack as many customers as possible in a single DB.

The following graph shows good improvement when I made the change, and ran a load test again. (The change is essentially a one-line change which pushes to the front of the slice instead of appending to the back).

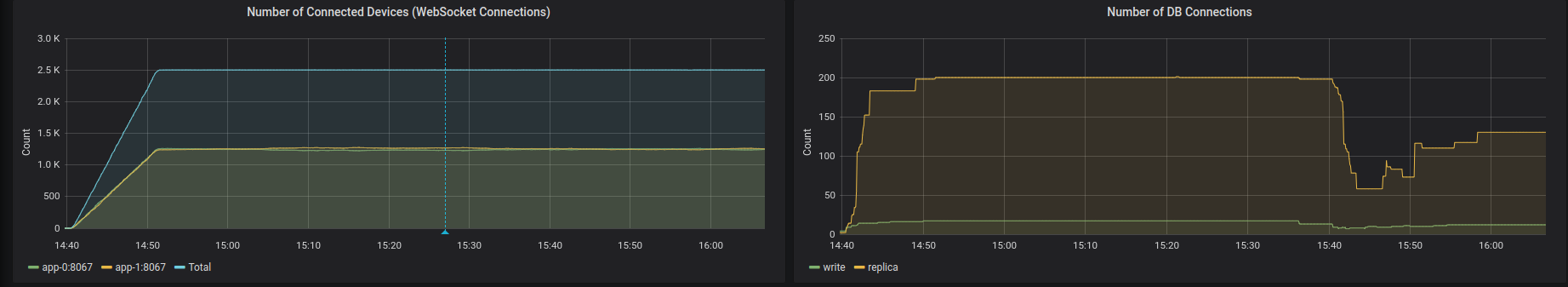

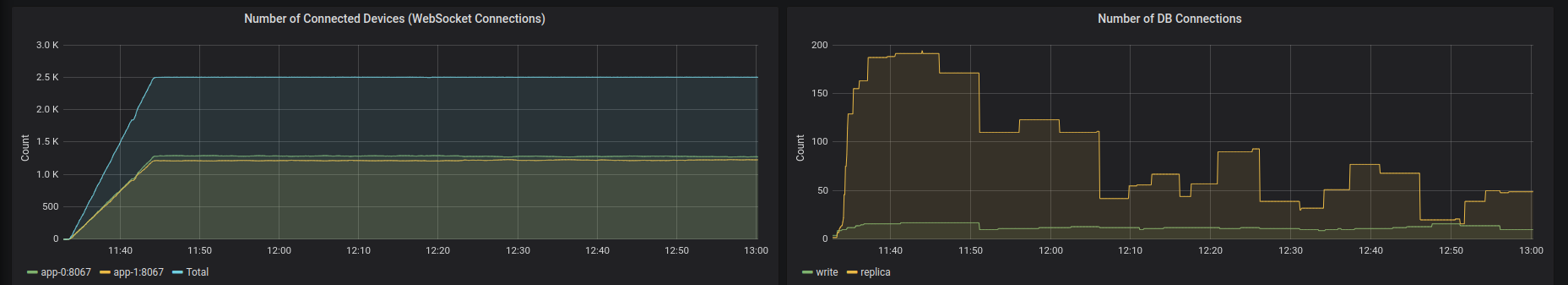

Old: We can see that the connections doesn't drop off until

ConnMaxLifetime.New: We can see that the connections nicely goes down as the idle timeout hits.

Wondering if there has been any thoughts given to this, and using a queue-based approach was a deliberate design decision?

/cc @kardianos

The text was updated successfully, but these errors were encountered: