-

Notifications

You must be signed in to change notification settings - Fork 17.9k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

runtime: apparent false-positive race report for a buffered channel after CL 220419 #42598

Comments

This comment has been minimized.

This comment has been minimized.

|

I've spent some time analyzing the effect of CL 220419 at the time and I concluded it's perfectly in line with MM.

This is true, but this relation does not seem to be transitive. Namely, if there are more than C operations, kth receive HB k+Cth send, but it does not HB 2*k+Cth send. So when the code does: It only synchronizes with the completion of the last cap(sem) operations, but not with all the previous ones. |

This comment has been minimized.

This comment has been minimized.

|

@dvyukov If I understand you correctly, that sounds like a bug in the memory model. Perhaps the memory model ought to say that the kth receive on a channel with capacity C happens before the k+Cth+N send from the channel completes, where N is any non-negative integer. There is no necessary relationship between sends, of course. But with capacity C, a receive may happen concurrently with up to (but not including) C sends, but it happens before C sends complete, or C+1 sends complete, or C+2 sends complete, etc. |

|

@dvyukov, in this case we should be able to prove that all previous receives happen before the final send by induction, because all sends on the channel occur in the same goroutine.

|

|

@ianlancetaylor, I think the terms “kth receive” and “k+Cth send” are confusing and perhaps misleading here. Identifying the “kth receive” requires a total order among the receives, but the point of HB is that we may only have a partial order. A more precise definition might be more like: “If a send on a channel with capacity C happens before k ≥ C other sends on the channel, then the receive corresponding to the first send happens before the last of those k+1 sends completes.” |

Good question.

This feels a bit fishy to me. It seems we are trying to compensate for broken transitivity, where fixing transitivity would cleaner. -The kth receive on a channel with capacity C happens before the k+Cth send from that channel completes. +The kth receive completion on a buffered channel with capacity C happens before the k+Cth send. It does not generalize the rule for unbuffered channels anymore: the use of send/recv and send completion/recv completion is swapped. But breaking generalization by introducing new custom rules also feels fishy to me :) |

Good point. @dfava where is the flaw now? Also now I wonder if we are dealing with one issue or two :) There seems to be a bug in race detector implementation, but also do we still want to fix the memory model so that similar synchronization is provided even when sends do not happen in the same goroutine? For ListModules is seems inherent that all sends happen in the same goroutine (?). |

|

In this case it actually is important that the initial sends happen before the final loop, so I would be wary about strengthening the model too much. If the initial sends were moved into the background goroutines, it would be possible for that final channel-fill loop to execute before the background goroutines, causing them to leak (blocked on the sends) instead of execute. That wouldn't be a data race, but only because the mutations would no longer happen at all! |

|

@dvyukov, also note that the phrasing I suggested in #42598 (comment) does continue to generalize to the unbuffered case. |

|

Given that we're coming up against the beta, should we revert CL 220419? |

|

Yes, I think we need to rollback for now and add this pattern as a new race test. False positives are much worse than false negatives. |

|

Change https://golang.org/cl/271946 mentions this issue: |

|

I sent a revert CL: https://golang.org/cl/271946. |

|

Sorry I wasn't receiving emails about this thread until the change was reverted just now. I haven't had a chance to read it, but I will soon and can comment. |

|

For what it's worth, the revert CL is not yet committed. A simple roll forward fix is still possible, but it would have to happen very very soon. |

|

Is the failure intermittent (or "somewhat deterministic")? I'm not able to reproduce it on commit 5e58ae4. Seems like there has been some refactoring around modules. I can't find "reportRetractions" in the source tree. Seems like the function has recently been removed and it used to live in @bcmills , is the race still reported after this recent refactoring? (I haven't had a chance to fully digest the discussion above, just going piece by piece from the beginning. I agree on reverting the CL so we can drill down into this carefully. I doubt there is a "bug" in memory model specification; more likely the bug is on my code, or on the code where the race is being reported) |

|

Also, I vaguely recall an earlier discussion on how the race detector treats differently channels whose elements are size zero... Perhaps that special treatment maters now. |

Good point! I assume for I think we could do: |

|

I agree, we could put a check for elemsize. It's nice and simple, and it doesn't force us to revisit the assumptions made about channels of elemsize==0 right now. I'm happy that the memory model specification is fine as it is, and I also see that the code in ListModules is correct. I also think that my patch is not wrong :) though it did expose the issue with elemsize==0, and, in hindsight, I should have accounted for that when testing. I'll spend a bit of time thinking about what exactly happens when elemsize==0 interacts with racereleaseacquire(). |

The failure is intermittent. There have so far been at least three failures in TryBots and one on the build dashboard (2020-11-14T17:24:37-92c732e/linux-amd64-race). |

My understanding is that previously HB was completely cumulative, so the fact that the same address was shared between unrelated elements did not matter (we only get more false negatives). But racereleaseacquire is not cumulative, it always drops everything that was there before, so sharing breaks. |

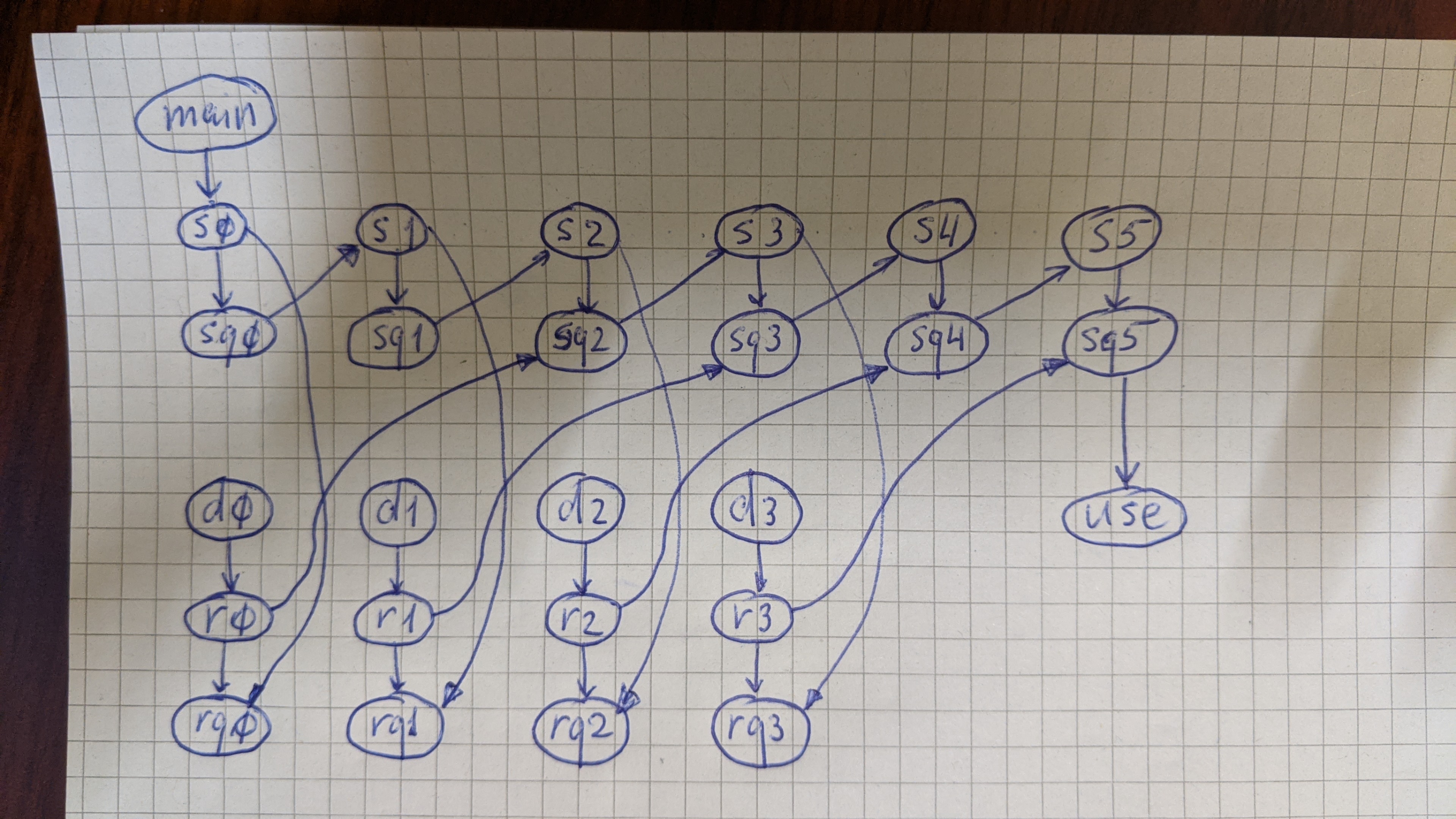

You are absolutely right. Here is in a bit more detail, perhaps it's nice to document. I'll represent each entry in the channel's buffer as B0 and B1. The end state is, T0 is properly synchronized with T1 and T2: T0 "knows" of both T1's and T2's writes to X and Y. However, we currently model channels whose elmsize==0 with one buffer entry, so only B0 and no B1. The picture then looks like: In the end state now, T0 is unaware of one of the writes, so a race is flagged. |

|

It seems like we are able to keep CL 220419 and add the check that Dmitry suggested. Let me know if you would like me to do it, or if you prefer to do it yourselves (since you are probably faster at it than me, given your familiarity with the flow and my lack of access to buildbot and etc). I'm happy either way! |

|

Whoever is doing it, just please add some comments/references and tests ;) |

:) Is it possible to force a specific scheduling of goroutines so we hit the scenario exactly? Maybe using internals/unsafe/something? |

|

(I'm working on a patch) |

|

Change https://golang.org/cl/271987 mentions this issue: |

|

I submitted a patch which included the check for elemsize, documentation, and a test. |

I got the following race report on CL 258758.

(https://storage.googleapis.com/go-build-log/04d98a9c/linux-amd64-race_596e00e8.log)

The reported race is for a function that uses an N-buffered channel as a semaphore. The function sends to the channel (from the main goroutine) to acquire a semaphore token, and receives from the channel (in a background goroutine) to release the token. Before the function returns, it sends N additional tokens to the channel to ensure that all of the background goroutines have finished.

I believe that this pattern is explicitly covered by the memory model:

This is the first race we've seen reported for this code, and the timing w.r.t. CL 220419, which changes the semantics of channels under the race detector, seems suspicious to me.

The text was updated successfully, but these errors were encountered: