runtime: test timeouts / deadlocks on NetBSD after CL 232298 #42515

Comments

|

2020-10-14T08:05:58-fc3a6f4/netbsd-amd64-9_0 has similar symptoms from before CL 232298, but it's the only such occurrence, and the failure rate is markedly higher since then. |

|

This seems to be reproducible on gomote (~20% of the time) with: |

|

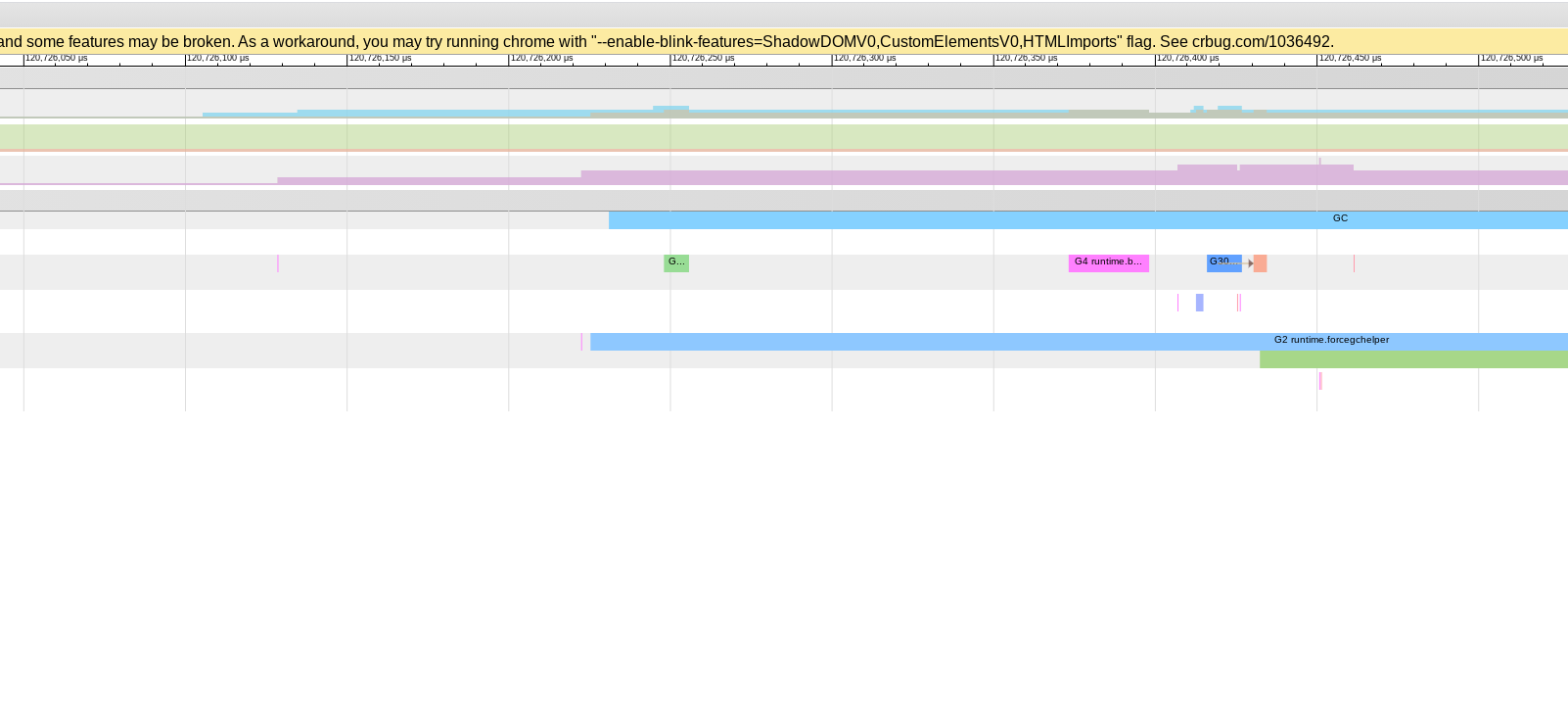

This issue is reminding me a bit of hard-to-reproduce issues we've been tracking with Two minutes later forcegchelper runs and lo and behold, the test starts making progress again (G30): Not necessarily related, but suspicious. |

|

Update for the day: I've narrowed down the issue to findrunnable finding a timer to wait on, deciding to netpollBreak to kick the poller... and then nothing. Either netpollBreak isn't waking the poller or there isn't actually a poller. Hopefully, I'll be able to narrow this further tomorrow. |

|

There is a poller, but it is not woken by The 180s netpoll should have woken from netpollBreak but did not. I see two primary possibilities:

I suppose I'll take a look at the kernel source tomorrow... |

|

Or (3) the event is edge-triggered and the kqueue call was slightly too late. Note that the netpollBreak write is very close in time to the netpoll call (which I'm logging before the call). |

|

@bsiegert are you aware of any recent issues with the NetBSD kernel that may cause the behavior described in #42515 (comment)? To add further details, all following calls to The source all seems OK to me:

This seems fine, but makes me wonder if there is some race where the condition variable isn't firing via a racing broadcast. The kqueue lock seems to prevent this, but who knows. |

|

Looking more closely at the write and kevent, I can get time uncertainty bounds [1] like:

The write bound start is after the kqueue bound start, so the race is close enough that we can't really tell which came first, which is a bit disappointing. Though recall that based on the kevent API contract it shouldn't matter which came first regardless. The kevent should return the event.

[1] That is, the start time is some time before the syscall was made, and the end time is some time after the syscall returned. But we can't be sure exactly when the call occurred in between. |
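To make the uncertainty-bound reasoning concrete, here is a minimal sketch in Go. The `bound` type, the `order` helper, and the numbers are hypothetical illustrations, not values from the trace in this issue. The order of two calls is only provable when one window ends before the other begins.

```go
package main

import "fmt"

// bound is an uncertainty window for one syscall: the call happened at some
// unknown instant between start and end (arbitrary time units).
type bound struct {
	start, end int64
}

// order reports which call provably came first.
// It returns -1 if a finished before b began, +1 if b finished before a
// began, and 0 if the windows overlap and the order cannot be determined.
func order(a, b bound) int {
	switch {
	case a.end < b.start:
		return -1
	case b.end < a.start:
		return 1
	default:
		return 0
	}
}

func main() {
	// Hypothetical numbers: the write window starts after the kevent window
	// starts, but the two windows overlap.
	kevent := bound{start: 100, end: 180}
	write := bound{start: 120, end: 130}
	fmt.Println(order(write, kevent)) // 0: order is undetermined

	// Disjoint windows give a definite answer.
	fmt.Println(order(bound{10, 20}, bound{30, 40})) // -1: first call came first
}
```

With overlapping windows the helper returns 0, which matches the situation described above: the write and the kevent are too close together to order.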

|

I've attempted to reproduce this issue on my own NetBSD VM, based on the gomote build script https://cs.opensource.google/go/x/build/+/master:env/netbsd-386/. Unfortunately that script builds NetBSD 9.0_2019Q4, which seems to no longer exist and thus doesn't build. The closest I could try was 9.0_2020Q2 and 9.1. I've been unable to reproduce the problem on either of these versions. It is possible that something has changed since 9.0_2019Q2 to fix the issue, or perhaps my environment is simply different enough somehow to avoid triggering this issue. If it is the former, then perhaps the fix is just to update the builders (cc @golang/release), though I don't understand NetBSD's release policy well enough to know if 9.0_2019Q2 is a supported release that we want to keep working (cc @bsiegert). |

|

Change https://golang.org/cl/277033 mentions this issue: |

|

Ah, after many hours of waiting I did manage to reproduce this on i386 9.0_2020Q2. Interestingly, the behavior is similar but not identical: This can't be a race in setup in |

|

Change https://golang.org/cl/277332 mentions this issue: |

|

I've pushed a workaround discussed with @aclements that could mitigate this issue, but it's pretty nasty, so I'd still rather find the actual cause. |

|

I've finally managed to get an i386 VM running a custom NetBSD kernel that definitely fails at c98ec4120ecf0b9a29bf31c1b00d7896536b7d76 (original 9.0 commit) and seems to pass at f15eb9e6ad34c3315d354274fd26356e3ae79d84 (HEAD), so I'll try to do a bisect. |

|

I found the bug!

First things first: the bisect was a red herring. I didn't give HEAD enough time to reproduce the issue. It turns out this is still failing on trunk NetBSD.

The issue is a race in kevent(2) after all. The bad flow is:

Step 3 is the problem here: once kq is unlocked, another kevent call can come along and miss this entry altogether. If that is a blocking call and there are no entries left, then the call will even block and only wake when a completely new event occurs or the timeout expires (i.e., step 4 doesn't notify the condvar [1]).

To verify this theory, I've added tracepoints to kqueue_scan, which can be seen in prattmic/netbsd-src@2cd2ba9. The interesting part of the trace is below. Note that the first two columns are PID and TID, respectively. The format at the end of the line is

I'll file a NetBSD bug once I figure out how.

This bug does not seem to be new; as far as I can tell it dates back to the addition of kqueue locking (NetBSD/src@c743ad7). It is exposed by the removal of sysmon as a backstop for overrun timers. Thus, I think http://golang.org/cl/277332 is still the best workaround [2].

It is unclear to me how difficult this is to fix, as it is not clear to me which, if any, constraints require unlocking the kq.

For completeness, I took a look at two other BSDs to see if they are affected:

OpenBSD: Has a Big Kernel Lock at syscall level, so no kevent locking: https://github.com/openbsd/src/blob/e20c779da119f998aba452410e439df1275cd7e8/sys/sys/syscall_mi.h#L92-L104

[1] N.B. putting a notification after step 4 would fix the problem for Go, but it would still be generally incorrect because calls that don't block could still return with missing events in violation of the API. |
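The shape of this race can be modeled in a few lines of Go. This is a toy sketch, not the kernel code: the `queue` type, its `scan` method, and the `unlocked` hook are all hypothetical names, and the second scanner is run from a callback so that the interleaving is deterministic. The model only assumes what is described above: a scanner takes an entry off the queue, drops the lock while it checks the entry, and requeues it afterwards.

```go
package main

import (
	"fmt"
	"sync"
)

// queue is a toy stand-in for a kqueue: a locked list of pending event IDs.
type queue struct {
	mu      sync.Mutex
	pending []int
}

// scan models one pass over the queue. It dequeues the first entry, drops
// the lock while re-checking it (the unlocked hook runs inside that window),
// then requeues the still-active entry and returns it.
func (q *queue) scan(unlocked func()) (int, bool) {
	q.mu.Lock()
	if len(q.pending) == 0 {
		q.mu.Unlock()
		return 0, false // queue looks empty; a blocking caller would sleep here
	}
	ev := q.pending[0]
	q.pending = q.pending[1:]
	q.mu.Unlock() // the problematic unlock: the entry is on no list right now

	if unlocked != nil {
		unlocked()
	}

	q.mu.Lock()
	q.pending = append(q.pending, ev) // requeue without notifying anyone
	q.mu.Unlock()
	return ev, true
}

func main() {
	q := &queue{pending: []int{42}}
	missed := false
	ev, ok := q.scan(func() {
		// A second scanner arrives while the first one holds the entry.
		_, found := q.scan(nil)
		missed = !found
	})
	fmt.Println(ev, ok, missed) // 42 true true
}
```

The second scanner sees an empty queue even though event 42 is pending the whole time, which is the missed-event behavior described above.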

|

Minimal C reproducer: https://gist.github.com/prattmic/8b5bc6c87437bd4496d5f546fb3226fc Pretty simple, just two threads concurrently polling the same kqueue. One of them usually misses an event within a few hundred iterations. Don't run this under ktrace with my kernel changes or the machine will panic! (OOM I guess?) |

|

Sent update to https://gnats.netbsd.org/cgi-bin/query-pr-single.pl?number=50094, turns out this issue was reported in 2015! Our strategy here should be to get http://golang.org/cl/277332 in and hopefully we can remove that one day when NetBSD is fixed. |

|

Awesome, thanks for the thorough investigation! I asked on the tech-kern@ mailing list to see if there can be any traction on this issue.

|

The netbsd kernel has a bug [1] that occasionally prevents netpoll from waking with netpollBreak, which could result in missing timers for an unbounded amount of time, as netpoll can't restart with a shorter delay when an earlier timer is added.

Prior to CL 232298, sysmon could detect these overrun timers and manually start an M to run them. With this fallback gone, the bug actually prevents timer execution indefinitely.

As a workaround, we add back sysmon detection only for netbsd.

[1] https://gnats.netbsd.org/cgi-bin/query-pr-single.pl?number=50094

Updates #42515

Change-Id: I8391f5b9dabef03dd1d94c50b3b4b3bd4f889e66
Reviewed-on: https://go-review.googlesource.com/c/go/+/277332
Run-TryBot: Michael Pratt <mpratt@google.com>
TryBot-Result: Go Bot <gobot@golang.org>
Reviewed-by: Michael Knyszek <mknyszek@google.com>
Reviewed-by: Austin Clements <austin@google.com>
Trust: Michael Pratt <mpratt@google.com>
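The backstop idea in this commit message can be sketched as follows. This is a simplified model under stated assumptions, not the runtime code: the `sched` type, its fields, and `monitorTick` are hypothetical names. A background monitor periodically compares the earliest timer deadline against the current time and, only when the workaround is enabled, starts a worker for a timer that has overrun.

```go
package main

import "fmt"

// sched is a hypothetical, heavily simplified stand-in for scheduler state.
type sched struct {
	nextTimer  int64 // deadline of the earliest pending timer; 0 means none
	workaround bool  // true only on platforms affected by the kernel bug
	kicks      int   // number of times the monitor started a worker
}

// monitorTick models one iteration of a background monitor. If the
// workaround is enabled and the earliest timer is past its deadline, it
// counts a kick (a stand-in for starting a worker to run the timer) and
// clears the deadline to model the worker having run it.
func (s *sched) monitorTick(now int64) {
	if !s.workaround {
		return
	}
	if s.nextTimer != 0 && s.nextTimer < now {
		s.kicks++
		s.nextTimer = 0
	}
}

func main() {
	s := &sched{nextTimer: 50, workaround: true}
	s.monitorTick(40) // timer is not due yet: nothing happens
	s.monitorTick(60) // poller never woke and the timer overran: kick
	fmt.Println(s.kicks) // 1
}
```

The cost of this design is that an overrun timer is only noticed on the monitor's next tick, so it adds latency compared with a poller that wakes correctly.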

|

With http://golang.org/cl/277332, the immediate problem is solved. Further cleanup is blocked by an upstream fix to https://gnats.netbsd.org/cgi-bin/query-pr-single.pl?number=50094, so I'll close this for now. |

|

Upstream change NetBSD/src@7fb7f43 should address this issue. I haven't had a chance to test it, but according to Jaromir it passes the C repro I created (which was more reliable than Go anyways). |

|

The fix in NetBSD will be pulled up to the release branch once the change is stabilized. Eventually the sysmon conditional can be removed for good, and Go users can be referred to the latest NetBSD release. I'll create a ticket for Go once that is possible. Removing the conditional would be highly preferable, as it's not at all good to be the only platform doing the timer kick from sysmon: not just due to the extra latency only experienced on older NetBSD, but there might also be some interference due to locking, even with a fixed kernel, because the timers are being checked from sysmon. |

|

@prattmic great work on it! |

sys/kern/kern_event.c r1.110-1.115 (via patch)

fix a race in kqueue_scan() - when multiple threads check the same kqueue, it could happen other thread seen empty kqueue while kevent was being checked for re-firing and re-queued

make sure to keep retrying if there are outstanding kevents even if no kevent is found on first pass through the queue, and only kq_count when actually completely done with the kevent

PR kern/50094 by Christof Meerwal

Also fixes timer latency in Go, as reported in golang/go#42515 by Michael Pratt

|

Change https://golang.org/cl/324472 mentions this issue: |

Detect the NetBSD version in osinit and only enable the workaround for the kernel bug identified in #42515 for NetBSD versions older than 9.2.

For #42515
For #46495

Change-Id: I808846c7f8e47e5f7cc0a2f869246f4bd90d8e22
Reviewed-on: https://go-review.googlesource.com/c/go/+/324472
Trust: Tobias Klauser <tobias.klauser@gmail.com>
Trust: Benny Siegert <bsiegert@gmail.com>
Run-TryBot: Tobias Klauser <tobias.klauser@gmail.com>
Reviewed-by: Michael Pratt <mpratt@google.com>
Reviewed-by: Ian Lance Taylor <iant@golang.org>
TryBot-Result: Go Bot <gobot@golang.org>
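The version gate described here amounts to a single comparison. The sketch below is an illustration, not the code from the CL: `needsWorkaround` and the `netbsd92` constant are hypothetical names, and the constant assumes the kernel revision number uses the MMmmrrpp00 layout, so that 9.2 is encoded as 902000000.

```go
package main

import "fmt"

// netbsd92 is the assumed encoding of NetBSD 9.2 as a kernel revision
// number (MMmmrrpp00 layout: major 9, minor 2). This is an assumption of
// the sketch, not a value taken from the issue.
const netbsd92 = 902000000

// needsWorkaround reports whether a kernel with the given revision number
// predates 9.2 and should therefore keep the sysmon timer workaround.
func needsWorkaround(osrev int64) bool {
	return osrev < netbsd92
}

func main() {
	fmt.Println(needsWorkaround(900000000)) // 9.0: true
	fmt.Println(needsWorkaround(902000000)) // 9.2: false
}
```

Checking once at startup keeps the comparison off the hot path; the monitor then only needs to read a boolean.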

(Issue forked from #42237; see #42237 (comment).)

2020-11-11T06:26:05-f2e186b/netbsd-amd64-9_0

2020-11-10T18:42:47-8f2db14/netbsd-386-9_0

2020-11-10T18:33:37-b2ef159/netbsd-386-9_0

2020-11-10T18:33:37-b2ef159/netbsd-arm-bsiegert

2020-11-10T15:05:17-81322b9/netbsd-arm64-bsiegert

2020-11-09T21:03:36-d495712/netbsd-amd64-9_0

2020-11-09T20:02:56-5e18135/netbsd-386-9_0

2020-11-09T19:46:24-a2d0147/netbsd-386-9_0

2020-11-09T19:00:00-01cdd36/netbsd-386-9_0

2020-11-09T16:09:16-a444458/netbsd-386-9_0

2020-11-09T15:20:26-cb4df98/netbsd-386-9_0

2020-11-09T14:17:30-f858c22/netbsd-386-9_0

2020-11-07T16:59:55-5e371e0/netbsd-amd64-9_0

2020-11-07T16:31:02-2c80de7/netbsd-386-9_0

2020-11-07T03:19:27-33bc8ce/netbsd-386-9_0

2020-11-06T20:49:11-5736eb0/netbsd-amd64-9_0

2020-11-06T19:42:05-362d25f/netbsd-386-9_0

2020-11-06T15:33:23-d21af00/netbsd-386-9_0

2020-11-05T20:35:00-2822bae/netbsd-arm64-bsiegert

2020-11-05T16:46:56-04b5b4f/netbsd-386-9_0

2020-11-05T16:46:56-04b5b4f/netbsd-amd64-9_0

2020-11-05T15:16:57-34c0969/netbsd-386-9_0

2020-11-05T14:54:35-74ec40f/netbsd-386-9_0

2020-11-05T00:21:39-c018eec/netbsd-386-9_0

2020-11-04T21:45:25-fd841f6/netbsd-amd64-9_0

2020-11-04T16:54:48-594b4a3/netbsd-386-9_0

2020-11-04T15:53:19-5f0fca1/netbsd-386-9_0

2020-11-04T06:12:33-8eb846f/netbsd-amd64-9_0

2020-11-03T23:05:51-e1b305a/netbsd-386-9_0

2020-11-03T04:11:02-974def8/netbsd-386-9_0

2020-11-03T00:50:57-ebc1b8e/netbsd-amd64-9_0

2020-11-02T21:08:14-4fcb506/netbsd-386-9_0

2020-11-02T21:00:57-d1efaed/netbsd-arm64-bsiegert

2020-11-02T03:03:16-0387bed/netbsd-amd64-9_0

2020-11-01T13:23:48-0be8280/netbsd-arm64-bsiegert

2020-10-31T08:41:25-f14119b/netbsd-amd64-9_0

2020-10-31T00:35:18-79fb187/netbsd-arm64-bsiegert

2020-10-30T22:21:02-64a9a75/netbsd-arm-bsiegert

2020-10-30T21:14:09-f96b62b/netbsd-386-9_0

2020-10-30T21:14:09-f96b62b/netbsd-amd64-9_0

2020-10-30T21:02:17-420c68d/netbsd-386-9_0

2020-10-30T20:20:58-6abbfc1/netbsd-386-9_0

2020-10-30T18:06:13-6d087c8/netbsd-386-9_0

2020-10-30T18:05:53-36d412f/netbsd-amd64-9_0

2020-10-30T18:01:54-fb184a3/netbsd-amd64-9_0

2020-10-30T17:54:57-e02ab89/netbsd-amd64-9_0

2020-10-30T16:29:11-1af388f/netbsd-amd64-9_0

2020-10-30T16:20:05-2b9b272/netbsd-386-9_0

2020-10-30T16:20:05-2b9b272/netbsd-amd64-9_0

2020-10-30T00:23:50-faa4426/netbsd-386-9_0

2020-10-30T00:13:25-60f42ea/netbsd-386-9_0

2020-10-30T00:03:40-01efc9a/netbsd-386-9_0

2020-10-29T22:45:29-f588974/netbsd-amd64-9_0

2020-10-29T22:44:49-f43e012/netbsd-386-9_0

2020-10-29T21:46:54-fe70a3a/netbsd-386-9_0

2020-10-29T18:26:42-0b798c4/netbsd-amd64-9_0

2020-10-29T15:27:22-68e30af/netbsd-386-9_0

2020-10-29T15:13:09-50af50d/netbsd-amd64-9_0

2020-10-29T15:11:47-ecb79e8/netbsd-386-9_0

2020-10-29T13:53:33-c45d780/netbsd-amd64-9_0

2020-10-29T08:08:26-d9725f5/netbsd-386-9_0

2020-10-29T08:00:50-8b51798/netbsd-386-9_0

2020-10-29T03:23:51-15f01d6/netbsd-386-9_0

2020-10-29T01:50:09-308ec22/netbsd-386-9_0

2020-10-29T00:07:35-c1afbf6/netbsd-386-9_0

2020-10-28T17:54:13-fc116b6/netbsd-amd64-9_0

2020-10-28T17:10:08-642329f/netbsd-386-9_0

2020-10-28T17:08:06-e3c58bb/netbsd-amd64-9_0

2020-10-28T16:17:54-421d4e7/netbsd-386-9_0

2020-10-28T14:25:56-b85c2dd/netbsd-amd64-9_0

2020-10-28T13:25:44-72dec90/netbsd-386-9_0

2020-10-28T05:02:44-150d244/netbsd-386-9_0

2020-10-28T04:20:39-02335cf/netbsd-amd64-9_0

2020-10-28T01:03:23-368c401/netbsd-386-9_0

2020-10-27T23:12:41-5d3666e/netbsd-386-9_0

2020-10-27T22:13:30-091257d/netbsd-arm-bsiegert

2020-10-27T21:29:13-009d714/netbsd-amd64-9_0

2020-10-27T21:28:53-933721b/netbsd-amd64-9_0

2020-10-27T20:22:56-9113d8c/netbsd-amd64-9_0

2020-10-27T20:04:19-de2d1c3/netbsd-amd64-9_0

2020-10-27T20:03:41-5c1122b/netbsd-amd64-9_0

2020-10-27T20:03:12-79a3482/netbsd-386-9_0

2020-10-27T19:52:40-c515852/netbsd-amd64-9_0

2020-10-27T18:38:48-f0c9ae5/netbsd-amd64-9_0

2020-10-27T18:38:48-f0c9ae5/netbsd-arm64-bsiegert

2020-10-27T18:13:59-3f6b1a0/netbsd-amd64-9_0