New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

runtime: high CPU usage with high timer adjustments #41699

Comments

|

Thanks for the test case. I wrote https://golang.org/cl/258303, which helps with that test case. It would be interesting to know whether it helps with your real code. Thanks. |

|

Change https://golang.org/cl/258303 mentions this issue: |

|

@ianlancetaylor Unfortunately that did not help much. CPU usage is still at 95-100%, but there's no It's certainly lower than without the CL, but not nearly as good as on Go 1.13.15. I'd add that this issue is no longer a problem for us since we've re-implemented our idling logic for IMAP so that it no longer involves moving connections deadlines to a sooner point. These pprof results are provided for an older version of our worker application specifically deployed for testing out the CL. But still there might be valid cases for moving deadlines further, so I'd not close this. |

|

@dobegor Thanks for testing it. Unfortunately that profile doesn't tell me anything useful. If you are interested in pursuing this, it would be very helpful to have a sample program that still shows the CPU regression. I do not see the CPU regression in the sample program that you already provided. That leads me to believe that there must be some relevant difference in your real application, but I don't know what it might be. |

|

We are experiencing the exact same issue. Upgrading from 1.13 to 1.15 makes our software (a HTTP reverse proxy) taking 40% more CPU with runtime.adjusttimers being the top function. When testing your patch, it does not change the CPU consumption, but the top function becomes runtime.TimeSleepUntil instead. |

|

@rs Thanks for the info. I've updated https://golang.org/cl/258303. Would it be possible for you to give it another try? Thanks. |

|

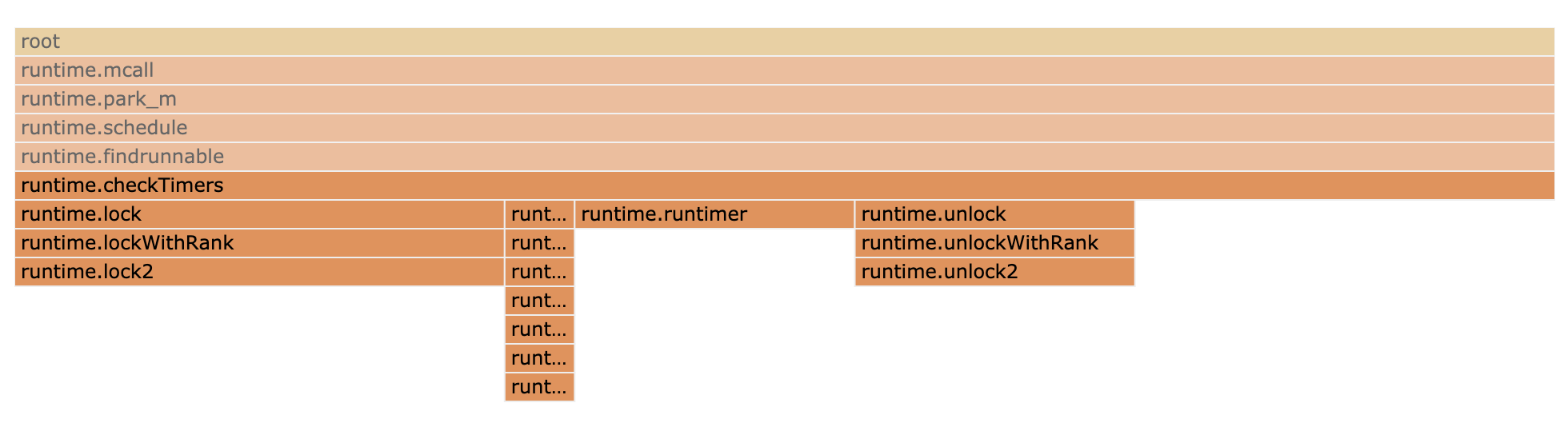

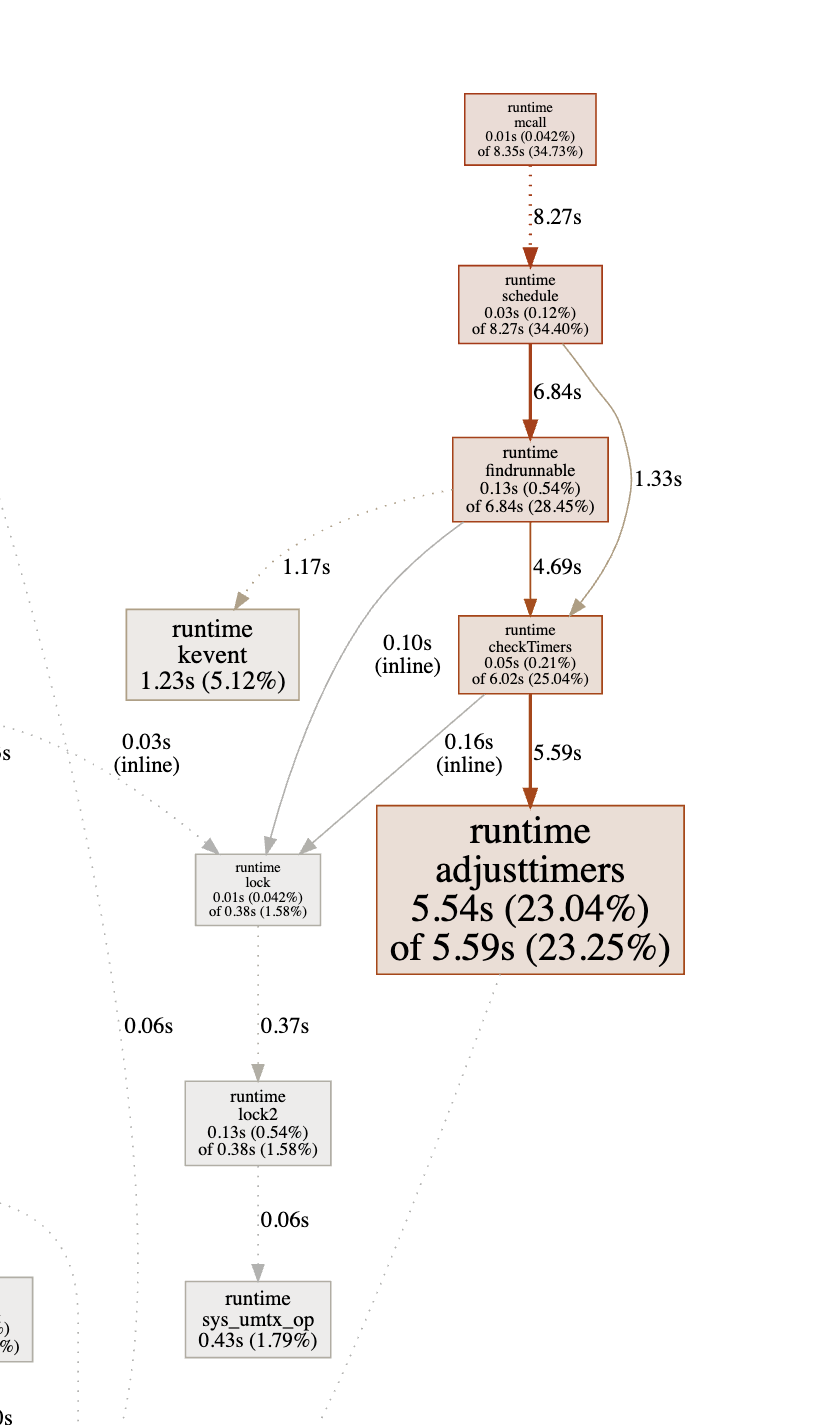

With this patch, the delta is down to 10% increase instead of 40%. Nothing really stand out anymore in the top. The next "higher than before" function usage are runtime.scanobject and runtime.lock2 (see screenshot): If I compare flamegraphs, runtime.checkTimer seems to be the one adding 3% on the selected pair (top is control, bottom is canary): Here is a zoom on runtime.checkTimer: |

|

Thanks for the detailed examination. I've adjusted checkTimers to take advantage of the new field. It might be worth trying https://golang.org/cl/258303 again, at your convenience. |

|

We are down to an average of 4% CPU increase with still up to 10-20% on some canary pairs. |

|

@rs Thanks. In the absence of a test case I think I'm going to leave it there. We know that the new timer code is significantly better for a good range of real code, it's OK if it's a little worse for some code. @dobegor I would still very much like to hear whether https://golang.org/cl/258303 helps at all with your real code. Thanks. |

|

@ianlancetaylor For us, 4% is still pretty high and costly given our scale. The code we are running is not particularly special, it's basically a simple reverse proxy built on top of net/http and x/net/http2. I'm surprised this issue does not seem hit more people. What do you suggest to make our code less impacted by this issue? We do not update timers and we aren't using so much of them except a deadline context to expire client requests which is almost never reached. |

|

@rs I agree that 4% is higher than I would like, but there is only so much I can do without a test case. I'm essentially guessing. So what I suggest is: if at all possible, give me a test case that I can run to see the results that you are seeing. Thanks. |

Will do that this week and get back to you with the results. |

Ok I'll try to come up with a test case. |

|

@ianlancetaylor: I can't find a way to isolate the issue. With all my synthetic tests I've done trying to simulate what we do in production, the more I put timers, the better Go 1.15 behave compared to 1.13 (as you would expect). We don't have an unreasonable use of timers anyway, the only timer we have in the critical path is a context deadline for each client request. Is there any info I could provide that would help you understand what's going on? |

|

@rs I suppose updated profiles with the most recent version of the CL might help. Basically: where is the time going? Also, what can you tell me about how your application uses timers? For that matter, are we sure that timers are the reason for the slowdown from Go 1.13 to Go 1.15? There were, of course, other changes. @dobegor Have you had any chance to try the later version of https://golang.org/cl/258303? Thanks. |

|

@ianlancetaylor here it is Edit: fixed the control (wasn't the right code version) |

|

Thanks. I don't see anything obviously timer related there. The only possible timer related change I see there is a possible slowdown in |

|

I just edited the comment, the control wasn't right. Sorry about that. |

|

I suppose I still don't see anything obviously timer related. |

|

@ianlancetaylor I'm sorry for delaying the testing of the CL on our use case. I will post the test results asap as I've just got back from sick leave, this covid thing is no joke. |

|

Sorry to hear that, feel better soon. No rush on testing, I went ahead and submitted the change anyhow. It should be an improvement even if it doesn't fix the whole problem. |

|

Hey @ianlancetaylor I'm following up on @rs report as we are still experiencing this with Go 1.15.7. My initial digging showed a large CPU used by The graph below shows canary results:

A better view of some of our busiest sites show the trend, with large CPU increase from Go 1.15, helped somewhat by sysmon removal: From the profiler the main difference we now see is Thank you for any pointer! We're stuck on Go 1.13 :( |

|

@agirbal could you test with Go 1.16 as well (rc1 should be released today!)? 1.16 contains http://golang.org/cl/258303, which is specifically intended to reduce time in adjusttimers. Also, could you clarify which case the profile containing adjusttimers is on? Is that 1.15 with the sysmon frequency reduced, or baseline 1.15? |

|

@prattmic ok I just tried 1.16rc1 and the issue seems gone! That is amazing news, we can get back on top of our upgrades, thank you. In graph below:

I think for our purposes the <1% increase is acceptable and we won't have much time digging further. The sysmon part is interesting though, maybe we can discuss at #18236 |

|

I can confirm that using 1.16rc1 with the last version of CL 258303 did not show any measurable performance hit compared to Go 1.13.15 baseline on our production workloads. |

|

Great! I think we are done here, and agreed that sysmon discussion can go on #18236. |

What version of Go are you using (

go version)?Does this issue reproduce with the latest release?

Yes. This performance regression reproduces only on Go 1.14+. Go 1.13.15 is unaffected.

What operating system and processor architecture are you using (

go env)?go envOutputWhat did you do?

Running on 8-core Google Cloud machine.

What did you expect to see?

Same or less CPU usage on Go 1.15.2 as on Go 1.13.15

What did you see instead?

~70% CPU usage on Go 1.13.15 and 100% CPU usage on Go 1.15.2.

Actual story of this issue

We have a "worker" application that connects to email accounts via IMAP and listens them. There's one goroutine per connection, total ~160k connections per worker node (4-core machine on GCP).

Sometimes, connection's deadline is being moved (

conn.SetReadDeadline) to a sooner point than was previously set. This is a requirement due to application logic. This causesruntime.adjusttimersto do a lot of work.For example, here is the pprof profile with production workload: https://gist.github.com/dobegor/80134e0d4d997cf03fa8f429da72d753

Upgrading from Go 1.13 to 1.14/1.15.2 resulted in change ~80% CPU usage to 100% CPU usage and huge performance degradation of business metrics. We were unable to move from Go 1.13 because it actually takes a lot more resources to run the same code until we implemented some workarounds to not move deadline to a sooner point.

/cc @ianlancetaylor @ChrisHines -- awesome thanks for reaching out to help and find the root cause of this issue.

The text was updated successfully, but these errors were encountered: