
runtime: gc exhausts all cpu causing a short delay #33927


[image: heap profile, sample type inuse_objects, File: comet]


I have adjusted GOGC and GOMAXPROCS, but that doesn't help. The number of in-use objects is what the program really needs.


Usually the run queue rises during GC and comes back down once GC has completed.


gc log:


The first thing to do is to see if you can reduce the amount of memory you are allocating. The memory profile should be able to help you there. The GC is expected to use up to 25% of your CPU resources, and it sounds like that is what you are seeing. Is there anything running on your machine other than your Go program? Why do you have so many runnable goroutines?
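One common way to act on the first suggestion in a connection-heavy server is to reuse per-message scratch buffers instead of allocating new ones. A minimal sketch with sync.Pool, assuming messages arrive as base64-encoded byte slices; decodeWith is a hypothetical helper, not code from this issue:

```go
package main

import (
	"encoding/base64"
	"fmt"
	"sync"
)

// bufPool holds reusable scratch buffers. Storing *[]byte (not []byte) avoids
// an extra allocation when the slice header is put back into the pool.
var bufPool = sync.Pool{
	New: func() any {
		b := make([]byte, 0, 4096)
		return &b
	},
}

// decodeWith base64-decodes src into a pooled buffer and hands the result to fn.
// fn must not keep a reference to the slice after it returns: the buffer goes
// back to the pool and will be overwritten by a later call.
func decodeWith(src []byte, fn func([]byte) error) error {
	bp := bufPool.Get().(*[]byte)
	defer bufPool.Put(bp)

	need := base64.StdEncoding.DecodedLen(len(src))
	if cap(*bp) < need {
		*bp = make([]byte, need) // grow once; the larger buffer is kept in the pool
	}
	buf := (*bp)[:need]

	n, err := base64.StdEncoding.Decode(buf, src)
	if err != nil {
		return err
	}
	return fn(buf[:n])
}

func main() {
	msg := []byte("aGVsbG8=")
	err := decodeWith(msg, func(b []byte) error {
		fmt.Println(string(b)) // prints "hello"
		return nil
	})
	if err != nil {
		fmt.Println("decode:", err)
	}
}
```

Pooling targets the allocation rate on hot paths rather than the number of live objects, so it fits the case where the in-use object count is already as low as it can go.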

Probably not. The number of in-use objects is what the program really needs; this is already the object count after my optimizations.

Maybe this 25% means 25% of the Ps (procs) rather than 25% of CPU time? At that moment GC seemed to take more than 95% of the CPU.

No. When GC occurs, CPU usage jumps to over 95%.

It is my TCP server. There are about 800,000 established long-lived TCP connections, new users keep connecting over TCP at about 5,000 per second, and some users (connections) disconnect at the same time.


@ianlancetaylor Is there a place in the runtime source code where I can change this 25% threshold?


gc pacer trace:

pacer: assist ratio=+4.083553e+000 (scan 4177 MB in 6635->7146 MB) workers=12++0.000000e+000
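As a rough cross-check of this trace line, here is a sketch of the assist-ratio arithmetic assumed to match the Go 1.10 to 1.13 era pacer, taking GOGC=100 when the trace was recorded: expected scan work is heap_scan × 100/(100+GOGC), divided by the bytes left between the heap size at the start of the cycle and the heap goal. The formula is an assumption about that runtime era, not something stated in this thread, and assistRatio is an illustrative helper:

```go
package main

import "fmt"

// assistRatio estimates scan work owed per byte allocated during a GC cycle,
// following (as an assumption) the Go 1.10 to 1.13 era pacer:
//   expected scan work = heap_scan * 100 / (100 + GOGC)
//   assist ratio       = expected scan work / (heap goal - heap live at cycle start)
// All sizes are in MB, as printed by GODEBUG=gcpacertrace=1.
func assistRatio(heapScanMB, heapLiveMB, heapGoalMB float64, gogc int) float64 {
	scanWorkExpected := heapScanMB * 100 / float64(100+gogc)
	heapRemaining := heapGoalMB - heapLiveMB
	return scanWorkExpected / heapRemaining
}

func main() {
	// Values from the trace line: scan 4177 MB in 6635->7146 MB, assuming GOGC=100.
	r := assistRatio(4177, 6635, 7146, 100)
	fmt.Printf("assist ratio ≈ %.3f\n", r) // prints "assist ratio ≈ 4.087"
}
```

The result lands close to the logged 4.083553 (the MB values in the trace are truncated). Roughly, each byte a goroutine allocates during the mark phase obliges that goroutine to perform about 4 bytes of scan work itself, which is one way allocation-heavy request handling can stall while GC is running.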


I found the gcBackgroundUtilization constant and dedicatedMarkWorkersNeeded, and I am trying to adjust them.
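For context on what these two names control, here is a standalone sketch, assumed to follow the Go 1.10 to 1.13 era runtime, of how the 25% background goal is split between dedicated mark workers (whole Ps) and a fractional worker. markWorkers is a hypothetical re-implementation for illustration, not the runtime's own code:

```go
package main

import "fmt"

// gcBackgroundUtilization mirrors the runtime constant of the same name
// (0.25 in the runtime versions discussed in this issue).
const gcBackgroundUtilization = 0.25

// markWorkers splits the background mark goal into dedicated workers and a
// fractional utilization goal spread across all Ps (assumed Go 1.10 to 1.13 logic).
func markWorkers(gomaxprocs int) (dedicated int, fractional float64) {
	goal := float64(gomaxprocs) * gcBackgroundUtilization
	dedicated = int(goal + 0.5) // round to the nearest whole worker
	utilError := float64(dedicated)/goal - 1
	const maxUtilError = 0.3
	if utilError < -maxUtilError || utilError > maxUtilError {
		// Rounding missed the goal by more than 30% (GOMAXPROCS <= 3 or == 6):
		// drop a dedicated worker if we overshot and cover the rest fractionally.
		if float64(dedicated) > goal {
			dedicated--
		}
		fractional = (goal - float64(dedicated)) / float64(gomaxprocs)
	}
	return dedicated, fractional
}

func main() {
	for _, p := range []int{1, 2, 4, 6, 48} {
		d, f := markWorkers(p)
		fmt.Printf("GOMAXPROCS=%d: dedicated=%d fractional=%.4f\n", p, d, f)
	}
}
```

With GOMAXPROCS=48 it yields 12 dedicated workers and a fractional goal of 0, matching `workers=12++0.000000e+000` in the trace. This budget covers only the dedicated and fractional workers: mark assists performed by allocating goroutines and mark workers run on idle Ps come on top of it, so changing these values alone cannot cap total GC CPU.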


I adjusted the GC CPU proportion and the number of mark workers, but it didn't help. What I don't understand is why CPU usage is normally low, yet GC always drives it up, even above 95%, and then my service is delayed.


From what I’m seeing, the program is using 17 GB out of an available 96 GB of RAM. Overall the GC is using 8% of the CPU. The GC runs 3 or 4 times a minute for 2 or 3 seconds. Losing CPU to the GC affects the application.

When the GC thinks there is an idle P, it puts it to work doing GC work. The GC isn’t free, and the pacer seems to be limiting dedicated CPU to 25%, as it was designed to.

It is unclear what the application’s service level objective (SLO) is beyond faster, better, cheaper, so perhaps the rest of this message does not address the objectives. If, however, the SLO is expressed as a 90th or 99th percentile and not a maximum, then it may help.

There seems to be plenty of unused RAM. What happens when the application doubles GOGC, say GOGC=200? The issue indicated GOGC was varied and nothing happened. This is surprising, at least to me. The program should expect RAM usage to increase as the heap size increases from 6 GB to 9 GB. It should also expect the frequency of the GC to drop, which should cut the 8% in half since the GC runs half as often. While this does not address the fact that the GC periodically uses the CPU, it should reduce the time during which the application has reduced access to the CPUs. Since going from 6 GB to 9 GB is modest given 96 GB of RAM, GOGC can be doubled a couple more times, or until the overall frequency of GC interruptions is minimal and the application doesn't OOM. While this doesn’t address what happens when the GC is running, it should reduce the overall impact and perhaps meet the SLO.
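The heap-size arithmetic in this suggestion can be sketched as follows, using the heap-only GOGC formula of the Go versions of that time (newer releases also count stacks and globals toward the goal). The 3 GB live heap is an assumption chosen to match the 6 GB goal at GOGC=100; heapGoal and relativeGCFrequency are illustrative helpers:

```go
package main

import (
	"fmt"
	"runtime/debug"
)

// heapGoal returns the heap size the GC aims to finish the next cycle at,
// given the live heap left by the previous cycle: live * (1 + GOGC/100).
// gogc must be >= 0 (GOGC=off disables the collector entirely).
func heapGoal(liveBytes uint64, gogc int) uint64 {
	return liveBytes + liveBytes*uint64(gogc)/100
}

// relativeGCFrequency is how often GC runs at newGOGC compared with oldGOGC,
// assuming the live heap and the allocation rate stay constant: a cycle starts
// roughly once per live*GOGC/100 bytes allocated.
func relativeGCFrequency(oldGOGC, newGOGC int) float64 {
	return float64(oldGOGC) / float64(newGOGC)
}

func main() {
	const gb = 1 << 30
	// Assumed live heap of 3 GB, which matches a 6 GB goal at GOGC=100.
	live := uint64(3 * gb)
	for _, g := range []int{100, 200, 400, 800} {
		fmt.Printf("GOGC=%d: heap goal %d GB, GC runs %.3fx as often as at GOGC=100\n",
			g, heapGoal(live, g)/gb, relativeGCFrequency(100, g))
	}

	// The same knob can be changed at run time; SetGCPercent returns the old value.
	prev := debug.SetGCPercent(200)
	fmt.Println("previous GOGC:", prev)
}
```

The last step raises GOGC to 200 with debug.SetGCPercent, which has the same effect as starting the process with GOGC=200.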

On Fri, Aug 30, 2019 at 3:09 AM Ant.eoy wrote:

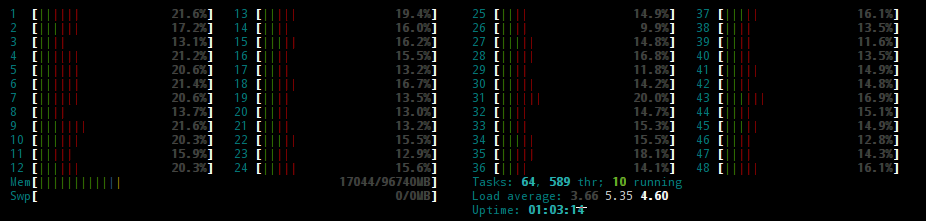

top: GC enabled, GOGC=100

bottom: GC disabled, GOGC=-1



@RLH Thanks for your advice. But I had already tried GOGC=200, 300, 500, 50 and 80, also changed GOMAXPROCS, and combined the two. Later I even modified the runtime source code (the gcBackgroundUtilization constant and dedicatedMarkWorkersNeeded). Changing the GOGC environment variable as you suggested does not solve my problem. My problem is that sporadic GC drives CPU usage to 95% or more, which delays my other code, such as proto.Marshal, net.Dial, base64 decoding or lock acquisition, by 2 seconds or more. Is there anything else I can provide?


Sorry if I wasn't clear. Increasing GOGC to, say, 800 or higher does not address the root issue; all it will do is reduce the frequency of the problem from 2 or 3 times a minute to once every other minute. It is a partial work _around_, nothing more.

(In reply to Ant.eoy's comment of Fri, Aug 30, 2019 at 12:30 PM.)

What version of Go are you using (go version)?

Does this issue reproduce with the latest release?

yes

What operating system and processor architecture are you using (go env)?

Each Linux production server:

CPU: 48 cores

memory: 96 GB

Linux ll-025065048-FWWG.AppPZFW.prod.bj1 2.6.32-642.el6.x86_64 #1 SMP Tue May 10 17:27:01 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux

What did you do?

My TCP long-connection server: one Go program is deployed per machine, and each Go program serves about 800,000 users.

Usually my CPU utilization is only about 30%, but during GC it bursts to over 95%. This occasionally causes latency jitter in my application during GC: some processing takes 2 to 3 seconds or more (including json.Marshal, net.Dial, mutex locking, base64 decoding and others).

I looked at the GC log and the runtime scheduler trace. My conclusion is that concurrent GC scanning takes up so much CPU that my business logic has no CPU left. I observed that about a quarter of the Ps are used for GC, yet no P seems to be left for other tasks. I don't understand why GC consumes so much CPU that my service has no CPU available for a while, which causes the latency jitter. Is there any solution, or a way to limit the number of CPUs GC uses?

What did you expect to see?

GC does not delay my service.

What did you see instead?

GC delays my service.
