
net: different memory consumption on different VMs with net.Listen #32709

Comments


Does the memory consumption of the Go process differ, or are your two different kernels or virtualization mechanisms just behaving differently?

Hmm, maybe. I tried the same thing with Java and got the same result, but when I use Python it doesn't happen. One more thing I should point out:

Please investigate further and tell us exactly what is happening, along with the code that you are using to test. Please report the exact RSS and VIRT numbers for the Go process on both systems. Also check your syslogs and any other places for logs. In short, we want to know whether this is a Go issue or something to do with the underlying OS/virtualization layer.
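One way to collect the RSS and VIRT figures from inside the Go program itself is to read `/proc/self/status`. This is a minimal Linux-only sketch, assuming the `VmRSS` and `VmSize` lines are reported in kB as the kernel prints them; `memFields` is a hypothetical helper name. Comparing these numbers with system-wide usage (for example `free` or `/proc/meminfo`) shows whether the memory belongs to the process or to the kernel.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strconv"
	"strings"
)

// memFields extracts VmRSS (resident set size) and VmSize (virtual size),
// both reported in kB, from the text of a /proc/<pid>/status file.
func memFields(status string) (rssKB, virtKB int64, err error) {
	sc := bufio.NewScanner(strings.NewReader(status))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) < 2 {
			continue
		}
		var dst *int64
		switch fields[0] {
		case "VmRSS:":
			dst = &rssKB
		case "VmSize:":
			dst = &virtKB
		default:
			continue // not a field we care about
		}
		v, perr := strconv.ParseInt(fields[1], 10, 64)
		if perr != nil {
			return 0, 0, perr
		}
		*dst = v
	}
	return rssKB, virtKB, sc.Err()
}

func main() {
	// Linux-only: read the current process's own status file.
	data, err := os.ReadFile("/proc/self/status")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	rss, virt, err := memFields(string(data))
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Printf("RSS: %d kB, VIRT: %d kB\n", rss, virt)
}
```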

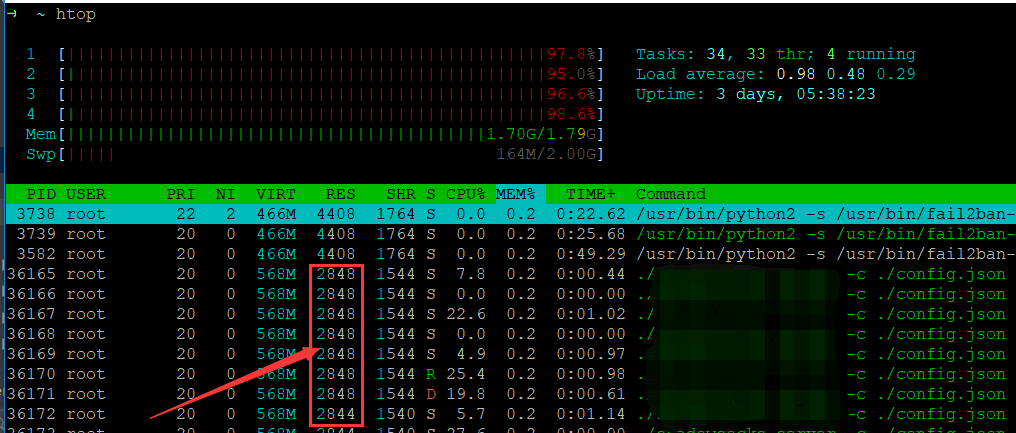

@agnivade Thanks for your reply. Here are some tests of my own. The code that listens on the TCP ports is just like this below:

The first time, I used Go to listen on 5000 TCP ports on Hyper-V; CPU and memory usage grew very quickly.

The second time, I used Go to listen on 3000 TCP ports on Hyper-V. Here is the result from the `htop` command, and here is the result from `/proc/meminfo`.

The third time, I used Python to listen on 5000 TCP ports on Hyper-V. Python opens the TCP ports very slowly; it took about one minute to finish. I should say that Go is much quicker than Python at listening on TCP ports.


A couple of follow-up questions:


As I mentioned before, I think the memory has been consumed by the kernel, not by the Go process. With Go, it costs about 1.6 GB; with Python, about 400 MB. Both were tested on Hyper-V. But when I use KVM, there isn't much difference in memory between Python and Go.


@agnivade @rsc @bradfitz @katiehockman @mikioh Here are some results from `cat /proc/vmallocinfo | grep reqsk_queue_alloc`. Each line represents a port that has been bound by the system. Both tests were run on Hyper-V. When I test Go, it also shows 5000 lines, but as you can see above, Go used 270336 bytes for each port, while Python used only 24576! I googled a little about `reqsk_queue_alloc`.
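To compare machines without eyeballing thousands of lines, the `grep` output can be summarized programmatically. This is a minimal sketch, assuming the usual `/proc/vmallocinfo` layout in which the second column is the allocation size in bytes and the caller symbol appears later on the line; `reqskUsage` is a hypothetical helper name, and reading the file typically requires root.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strconv"
	"strings"
)

// reqskUsage counts the /proc/vmallocinfo entries whose caller is
// reqsk_queue_alloc and sums their sizes (second column, in bytes).
func reqskUsage(vmallocinfo string) (count int, totalBytes int64, err error) {
	sc := bufio.NewScanner(strings.NewReader(vmallocinfo))
	for sc.Scan() {
		line := sc.Text()
		if !strings.Contains(line, "reqsk_queue_alloc") {
			continue
		}
		fields := strings.Fields(line)
		if len(fields) < 2 {
			continue
		}
		size, perr := strconv.ParseInt(fields[1], 10, 64)
		if perr != nil {
			return 0, 0, perr
		}
		count++
		totalBytes += size
	}
	return count, totalBytes, sc.Err()
}

func main() {
	// Reading /proc/vmallocinfo typically requires root.
	data, err := os.ReadFile("/proc/vmallocinfo")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	n, total, err := reqskUsage(string(data))
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Printf("%d reqsk_queue_alloc entries, %.1f MiB in total\n", n, float64(total)/(1<<20))
}
```

For scale: 5000 entries of 270336 bytes add up to about 1.35 GB, while 5000 entries of 24576 bytes add up to about 123 MB.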


In the kernel, `reqsk_queue_alloc` allocates memory proportional to `nr_table_entries`, which is derived from the listen backlog:

```c
int reqsk_queue_alloc(struct request_sock_queue *queue,
		      unsigned int nr_table_entries)
{
	size_t lopt_size = sizeof(struct listen_sock);
	struct listen_sock *lopt = NULL;

	/* Clamp to [8, sysctl_max_syn_backlog], then round up to a power of two. */
	nr_table_entries = min_t(u32, nr_table_entries, sysctl_max_syn_backlog);
	nr_table_entries = max_t(u32, nr_table_entries, 8);
	nr_table_entries = roundup_pow_of_two(nr_table_entries + 1);

	/* One struct request_sock pointer per table entry, on top of the listen_sock header. */
	lopt_size += nr_table_entries * sizeof(struct request_sock *);
	if (lopt_size <= (PAGE_SIZE << PAGE_ALLOC_COSTLY_ORDER))
		lopt = kzalloc(lopt_size, GFP_KERNEL |
					  __GFP_NOWARN |
					  __GFP_NORETRY);
	/* ... */
```
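To make the sizing concrete, here is a small Go port of the arithmetic in the kernel snippet above. It is a sketch under stated assumptions: 8-byte pointers (x86_64), a caller-supplied `maxSynBacklog` standing in for `sysctl_max_syn_backlog`, and a placeholder `listenSockSize` for `sizeof(struct listen_sock)`, which varies between kernel builds. It ignores page rounding and which allocator is used; `reqskTableBytes` and `roundUpPow2` are hypothetical names.

```go
package main

import "fmt"

// roundUpPow2 returns the smallest power of two >= n (for n >= 1),
// like the kernel's roundup_pow_of_two.
func roundUpPow2(n uint32) uint32 {
	p := uint32(1)
	for p < n {
		p <<= 1
	}
	return p
}

// reqskTableBytes mirrors the sizing steps of reqsk_queue_alloc.
// Assumptions: 8-byte pointers, and listenSockSize is a placeholder for
// sizeof(struct listen_sock), which differs between kernels.
func reqskTableBytes(backlog, maxSynBacklog, listenSockSize uint32) (entries, bytes uint32) {
	entries = backlog
	if entries > maxSynBacklog {
		entries = maxSynBacklog // min_t(u32, nr_table_entries, sysctl_max_syn_backlog)
	}
	if entries < 8 {
		entries = 8 // max_t(u32, nr_table_entries, 8)
	}
	entries = roundUpPow2(entries + 1)
	return entries, listenSockSize + entries*8
}

func main() {
	const maxSynBacklog = 65536 // hypothetical tcp_max_syn_backlog, high enough not to clamp
	for _, backlog := range []uint32{128, 1024, 32768} {
		entries, bytes := reqskTableBytes(backlog, maxSynBacklog, 0)
		fmt.Printf("backlog %5d -> %6d entries, %7d bytes of pointers\n", backlog, entries, bytes)
	}
}
```

Under these assumptions, a backlog of 128 gives 256 entries (2 KiB of pointers), a backlog of 1024 gives 2048 entries (16 KiB), and a backlog of 32768 gives 65536 entries (512 KiB) per listener. The real per-listener size on a given machine also depends on its `tcp_max_syn_backlog`, and vmalloc sizes are page-rounded, so the 16 KiB case is in the same range as the 24576 bytes per port reported above for Python.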

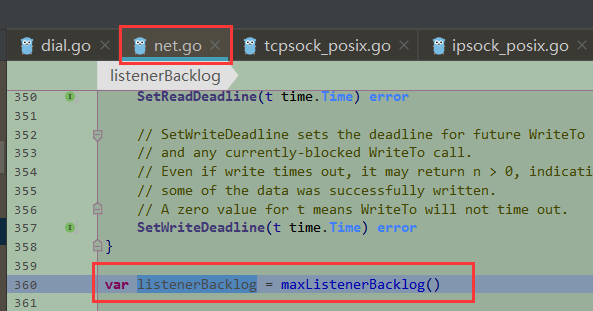

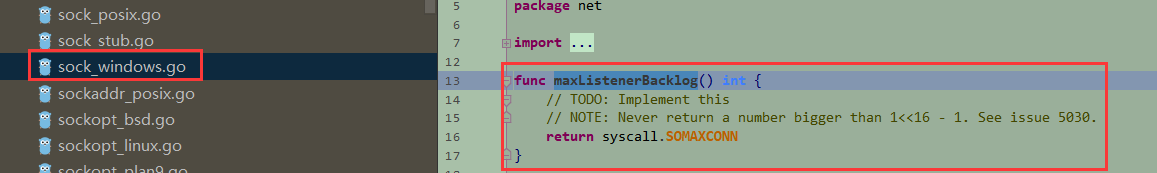

...So I suspect Python and Go are using different backlog values. Go uses:

```go
case syscall.SOCK_STREAM, syscall.SOCK_SEQPACKET:
	// For stream sockets, the backlog passed to listen(2) comes from listenerBacklog().
	if err := fd.listenStream(laddr, listenerBacklog(), ctrlFn); err != nil {
		fd.Close()
		return nil, err
	}
	return fd, nil
```

As for why the allocation is different under VMware vs DigitalOcean... perhaps it's using a different memory allocator? Or perhaps...


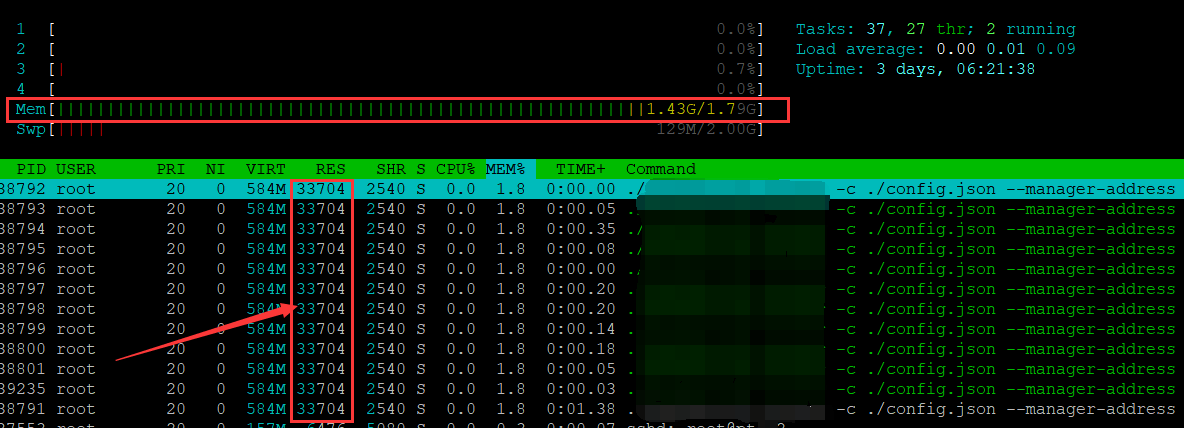

This time I have two different VMs. The VM in Japan behaves the same way as my Hyper-V test, even though it is KVM: memory increases very fast, and finally the system killed the Go process. Here is the result from `cat /proc/vmallocinfo | grep reqsk_queue_alloc`; as you can see, it is the same as on Hyper-V. On the other VM, at DigitalOcean, memory usage is normal. Based on this, I downgraded DigitalOcean's kernel version to ... so it has nothing to do with the virtualization layer (KVM or Hyper-V) or the kernel version.

@bradfitz `/proc/sys/net/core/somaxconn` is 128 on DigitalOcean but 32768 in the Japan IDC. I think setting a bigger number may give better performance for the queue that controls TCP connections. P.S. The Python project sets the backlog in its code to 1024, rather than taking the system default.


@bradfitz I haven't found it yet.


Thanks for your reply. I have checked the source code: on Linux, Go uses `/proc/sys/net/core/somaxconn` as the default backlog unless an error occurs while reading it, in which case it falls back to `syscall.SOMAXCONN`. Or maybe you mean calling `syscall.Listen()` directly rather than using `net.Listen("tcp", ":"+port)`?

Correct. Please let us know if there is anything else to be done from our side on this.

Thanks for your reply. I think someone has already discussed this in issue 9661. Although `ListenConfig` was added in Go 1.11 to customize socket options, when it initializes the socket it still calls `fd.listenStream` with the default `listenerBacklog()`. @bradfitz I would appreciate it if Go could provide an easier way to do this in the future, because lowering the kernel backlog value is not a good default for a Go project, and it may affect all processes on the system.
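The limitation described above can be seen in how `net.ListenConfig.Control` works: the hook receives the raw socket after it is created and before it is bound, so it can set socket options, but nothing in its signature controls the backlog later passed to `listen(2)`. A minimal Linux-oriented sketch; `listenWithControl` is a hypothetical helper, and `SO_REUSEADDR` is only an illustrative option.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"syscall"
)

// listenWithControl sets a socket option through ListenConfig.Control.
// The hook only sees the raw socket; the backlog is still chosen by the
// net package when it calls listen(2).
func listenWithControl(addr string) (net.Listener, bool, error) {
	called := false
	lc := net.ListenConfig{
		Control: func(network, address string, c syscall.RawConn) error {
			called = true
			var sockErr error
			err := c.Control(func(fd uintptr) {
				sockErr = syscall.SetsockoptInt(int(fd), syscall.SOL_SOCKET, syscall.SO_REUSEADDR, 1)
			})
			if err != nil {
				return err
			}
			return sockErr
		},
	}
	ln, err := lc.Listen(context.Background(), "tcp", addr)
	return ln, called, err
}

func main() {
	ln, called, err := listenWithControl("127.0.0.1:0")
	if err != nil {
		fmt.Println("listen failed:", err)
		return
	}
	defer ln.Close()
	fmt.Println("listening on", ln.Addr(), "control hook called:", called)
}
```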


Closing old issues that still have the WaitingForInfo label where enough details to investigate weren't provided. Feel free to leave a comment with more details and we can reopen. |

What version of Go are you using (`go version`)?

1.11.1

Does this issue reproduce with the latest release?

Yes, it still reproduces in 1.12.6.

What operating system and processor architecture are you using (`go env`)?

CentOS 7

What did you do?

I use a for loop to listen on about 5000 ports, and I have tried this on two different virtual machines, both running CentOS 7. Each listener is created with `net.Listen("tcp", ":"+port)`.

Here is the `uname -a` output. One VM is on DigitalOcean (https://cloud.digitalocean.com):

`Linux 3.10.0-957.12.2.el7.x86_64 #1 SMP x86_64 GNU/Linux`

and the other was created with VMware on my own computer:

`Linux . 3.10.0-229.1.2.el7.x86_64 #1 SMP x86_64 GNU/Linux`

The VM on DigitalOcean consumes about 30 MB of memory in total, while the VM on VMware consumes more than 2 GB in total. Why could this happen?
