x/tools/gopls: fails on standard library outside of $GOROOT #32173

Comments

|

I have the same problem with vim+ale+gopls on OSX. Go-to-reference and completion do not work in the standard library after I jump into a standard library file from my project. |

|

What is the output of |

|

@stamblerre FYI |

|

Do you have the standard library checked out anywhere else or are you jumping to the definitions under |

|

I'm not sure where I am.

|

|

Hm, then your issue is different from this bug. Are you able to share any |

|

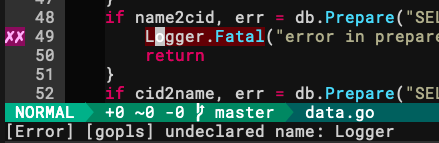

I can run go build in my project, but gopls is still reporting errors |

I have set -logfile /Users/arthur/gopls.log, but nothing is printed: let g:ale_go_gopls_options='-logfile=/Users/arthur/gopls.log' |

|

I'm on the latest master branch. |

|

I run

but these files are in the same package. |

|

I have also cleaned the go build cache, but nothing changed. |

|

same for internal package. |

The errors you are seeing are very strange. Have you set

Can you confirm this by sharing the output of

Can you also try adding v.session.log.Debugf(ctx, "pkg %s, files: %v, errors: %v", pkg.PkgPath, pkg.CompiledGoFiles, pkg.Errors) to line 103 of |

|

I rebuilt gopls and got log output. Then I tried |

|

@stamblerre ping |

|

@arthurkiller: Your issue seems completely different from the original one filed above. Do you mind filing a separate issue with your log output? |

|

NVM, but it is still not clear to me what goes wrong in this issue. |

|

@arthurkiller: I just took another look at your log output. It seems that you are using modules, but you are in a directory outside of your |

|

Ah, I see you are actually in your |

|

OK, I will open another issue. Thanks a lot. |

|

Investigated this further and filed #33548 as a follow-up to this issue. Closing. |

|

Awesome, now I can use gopls again! Really fast. happy hacking again. lol |

Forked from microsoft/vscode-go#2511.

This only happens if you clone the Go project into a second place outside of your $GOROOT.
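To tell which situation you are in (a standard library file under $GOROOT versus a second checkout elsewhere), a quick lexical path check may help. The following is only a sketch: the helper name isUnderRoot and the example paths are hypothetical, it does not resolve symlinks, and it is not how gopls itself decides anything.

```go
package main

import (
	"fmt"
	"path/filepath"
	"strings"
)

// isUnderRoot reports whether path is root itself or lies inside it.
// The comparison is purely lexical; symlinks are not resolved.
// (Hypothetical helper for illustration, not part of gopls.)
func isUnderRoot(root, path string) bool {
	rel, err := filepath.Rel(filepath.Clean(root), filepath.Clean(path))
	if err != nil {
		// Rel fails, for example, when one path is absolute and the other is not.
		return false
	}
	if rel == "." {
		return true
	}
	// A relative path that climbs out of root starts with "..".
	return rel != ".." && !strings.HasPrefix(rel, ".."+string(filepath.Separator))
}

func main() {
	// Assumed value; take the real one from `go env GOROOT`.
	goroot := "/usr/local/go"

	files := []string{
		"/usr/local/go/src/fmt/print.go", // standard library under GOROOT
		"/home/me/src/go/src/fmt/print.go", // hypothetical second clone
	}
	for _, f := range files {
		fmt.Printf("%s under GOROOT: %v\n", f, isUnderRoot(goroot, f))
	}
}
```

If the file you jumped to is reported as outside $GOROOT, you are likely looking at a second clone of the Go project, which is the case this issue describes.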