New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

proposal: archive/zip: NewZipWriter should support Ahead-of-time CRC and Sizes #23301

Comments

|

@rsc - A variation on this idea (that works for my use case) is found at https://github.com/hidez8891/zip/ Rather than the implemented modification of CreateHeader/Create, I'd propose a new method (or method pair) that allows non-streaming creation of file entries - leaving the default behaviour as-is, but allowing the new use case. |

|

Any functionality that allows the user to preset the size seems brittle. There are 3 pieces of information that needs to be known ahead-of-time: CRC32, compressed size, and uncompressed size. The compressed size is not known by the user and is dependent on the underlying compression implementation used. The only API that seems to make sense to me is: func (*Writer) AddFile(fh *Header, b []byte) error While I understand your use-case, it doesn't seem like this issue is prevalent enough to warrant new API being added. |

|

@steve-gray which old software are you aware of (and which version) that doesn't support content descriptors? |

|

It doesn't have to be ahead of time, we just have to seek back earlier in the file and write it out, right? So maybe we could do this transparently if the underlying writer allows seeking? |

|

Yes and no. If the size was less than 4GB, then it's easy to seek back and re-write the fixed-width 4B fields. However, if the file written was large enough and had to upgrade to ZIP64, it would not be able to go back and write the local headers for ZIP64. That being said, if the purpose of all this is to support old readers, then I doubt an old reader would understand ZIP64 anyways. Secondly, as a user, I would be surprised if the output format differed depending on whether the underlying writer was an EDIT: We could always emit a ZIP64 record conservatively. This leads to an increase of 28B for every file. Old readers that don't support ZIP64 should still ignore the ZIP64 extra data. |

|

@rasky's question is still unanswered: what programs care about this? It may just not be worth doing. |

|

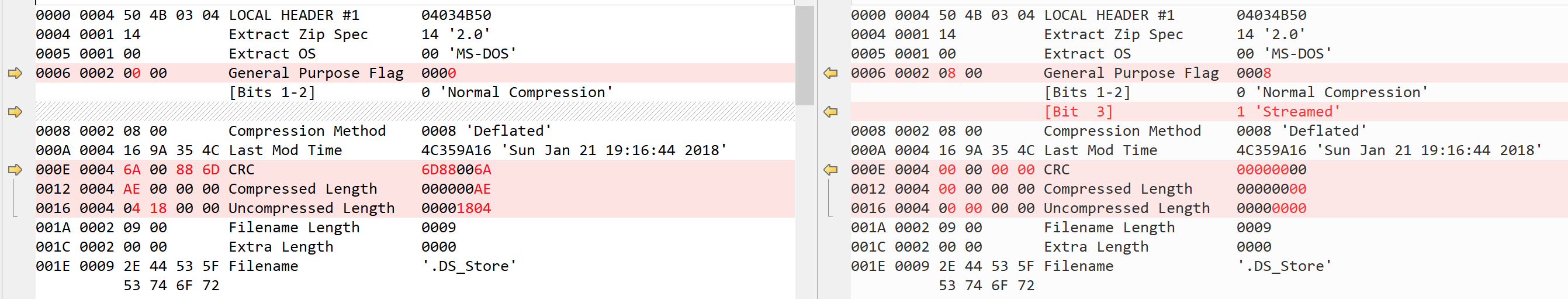

@rsc I'm try to upload a zip file into google chome webstore (https://chrome.google.com/webstore/developer/dashboard) but it indicate that the file is not valid. After fixing it with Generated files: File debugging: Difference between I hope this can help |

|

following, I have the same issue |

|

@genez you mean you are using Google Chrome Webstore? Maybe this should be a bug for Google Chrome Webstore instead. What Go generates is a valid zip file already. |

|

@rsc yes, I could submit a bug for Google Chrome Webstore. I would see this as an improvement rather than a bug What's your opinion? |

|

The whole zip.Writer interface and design is predicated on having an io.Writer and generating the output in one pass. And the zip file format is designed to make that possible. It's far from clear that we need to take on the complexity of two passes just for Google Chrome Webstore. Even knowing about Are there any other reasons we should do this? |

|

An additional operation to add a file from a buffer would:

1. Only represent a slight growth to API (1 operation)

2. Allow existing code to work as-was, without impact or output changes.

3. Allow users impacted by this issue to have a path to support their scenarios.

4. Preserves the single-pass write style.

It seems like a good compromise - and the read side needs to be able to parse these anyway for files produced, so it’s not like we need to do work in that area.

Get Outlook for iOS<https://aka.ms/o0ukef>

…________________________________

From: Russ Cox <notifications@github.com>

Sent: Tuesday, February 6, 2018 7:19:50 AM

To: golang/go

Cc: Steve Gray; Mention

Subject: Re: [golang/go] proposal: archive/zip: NewZipWriter should support Ahead-of-time CRC and Sizes (#23301)

The whole zip.Writer interface and design is predicated on having an io.Writer and generating the output in one pass. And the zip file format is designed to make that possible. It's far from clear that we need to take on the complexity of two passes just for Google Chrome Webstore. Even knowing about zip -F is maybe enough.

Are there any other reasons we should do this?

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub<#23301 (comment)>, or mute the thread<https://github.com/notifications/unsubscribe-auth/AN45v_Waqe-tk6MdLMNVR3uJtDsnD3hfks5tR3B2gaJpZM4RQadS>.

|

|

The complexity being added is only to support single-pass readers (which Chrome seems to be one). The more I think about it, the more I believe supporting such a use-case is actually a mistake. There is nothing in the specification that forbids a valid file to be written, but omitted from the trailing central-directory. In fact, you can "remove" and add files to an existing ZIP file by concatenating data to the end and writing an entirely new central directory that references the newly added files and ignores the "removed" ones. When reading in a streaming manner, it is impossible for a reader to determine whether the file really exists or was "removed" until it hits the directory. Fundamentally, the ZIP format is not streaming read friendly. Giving users the illusion that it is the case, is misleading in my opinion. If we're going to add complexity, #15626 is more in line with actual properties that ZIP guarantees. |

|

Per @dsnet's comment, I think we should decline to do this. |

Golang's archive/zip does not support pre-calculation of the file sizes and CRC statistics used in the PKZIP file header structure, requiring use of the 'Content Descriptor' general purpose flag and appending a structure after each included file. This flag is not compatible with various older software, precluding archive/zip from being used in some application scenarios.

What version of Go are you using (

go version)?1.9

Does this issue reproduce with the latest release?

Yes

What operating system and processor architecture are you using (

go env)?OSX High Sierra (Intel x64)

What did you do?

What did you expect to see?

What did you see instead?

The text was updated successfully, but these errors were encountered: