-

Notifications

You must be signed in to change notification settings - Fork 18k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

runtime: Windows 2008: all goroutines are asleep - deadlock #22394

Comments

|

I just noticed that your using Cygwin. Sadly Go has never supported running inside Cygwin. Is it possible for you to test outside the Cygwin environment?

… On 23 Oct 2017, at 22:05, Felix Geisendörfer ***@***.***> wrote:

I already reported this issue in #20362 (which might be the same or related), but am opening a new issue as requested by @davecheney .

What version of Go are you using (go version)?

go version go1.9.1 linux/amd64

Does this issue reproduce with the latest release?

Yes.

What operating system and processor architecture are you using (go env)?

GOARCH="amd64"

GOBIN=""

GOEXE=".exe"

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOOS="windows"

GOPATH="/dn"

GORACE=""

GOROOT="/usr/local/go19"

GOTOOLDIR="/usr/local/go19/pkg/tool/linux_amd64"

GCCGO="gccgo"

CC="gcc"

GOGCCFLAGS="-m64 -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build141570660=/tmp/go-build -gno-record-gcc-switches"

CXX="g++"

CGO_ENABLED="0"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

What did you do?

I was able to reproduce the deadlock with a minimal test program that basically just executes a http GET request.

I ran the command in a loop and got the following results:

go1.7.5: No deadlocks

go1.8.4: No deadlocks (This means that the e4371fb aka go1.8beta2-389-ge4371fb179 commit mentioned above is probably not the root cause)

go1.9.1: Lots of deadlocks, see example

Notes:

I was able to consistently reproduce this in 64Bit Win7 VM with Cygwin. However, it doesn't happen for every run of the program, but 20-50% of the runs trigger the problem.

I was not able to reproduce this in a local 64Bit Win7 Professional VM (also using Cygwin)

What did you expect to see?

No deadlock.

What did you see instead?

https://gist.github.com/felixge/a2efcaf098e1b8fa0ec1f7d67fb2481c

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub, or mute the thread.

|

|

@davecheney it's a bit tricky, because this machine is in a factory in china and I don't have physical access to it. But I'll try to have somebody on-site execute the example program above using cmd.exe as soon as possible. That being said, I'm curious, how is executing a Go program under cygwin different from executing it in cmd.exe? The Go program is compiled under Linux (not in cygwin). The only reason we're using cygwin is because it's easier for us to manage machines running sshd under cygwin, than managing windows machines directly. Edit: Not sure if it counts, but I just launched |

|

I’m sorry I don’t know the answer to that. All I know is the installation instructions for Windows recommend minGW and cmd.exe or powershell.

… On 23 Oct 2017, at 22:19, Felix Geisendörfer ***@***.***> wrote:

@davecheney it's a bit tricky, because this machine is in a factory in china and I don't have physical access to it. But I'll try to have somebody on-site execute the example program above using cmd.exe as soon as possible.

That being said, I'm curious, how is executing a Go program under cygwin is different from executing it in cmd.exe? The Go program is compiled under Linux (not in cygwin). The only reason we're using cygwin is because it's easier for us to manage machines running sshd under cygwin, than managing windows machines directly.

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub, or mute the thread.

|

|

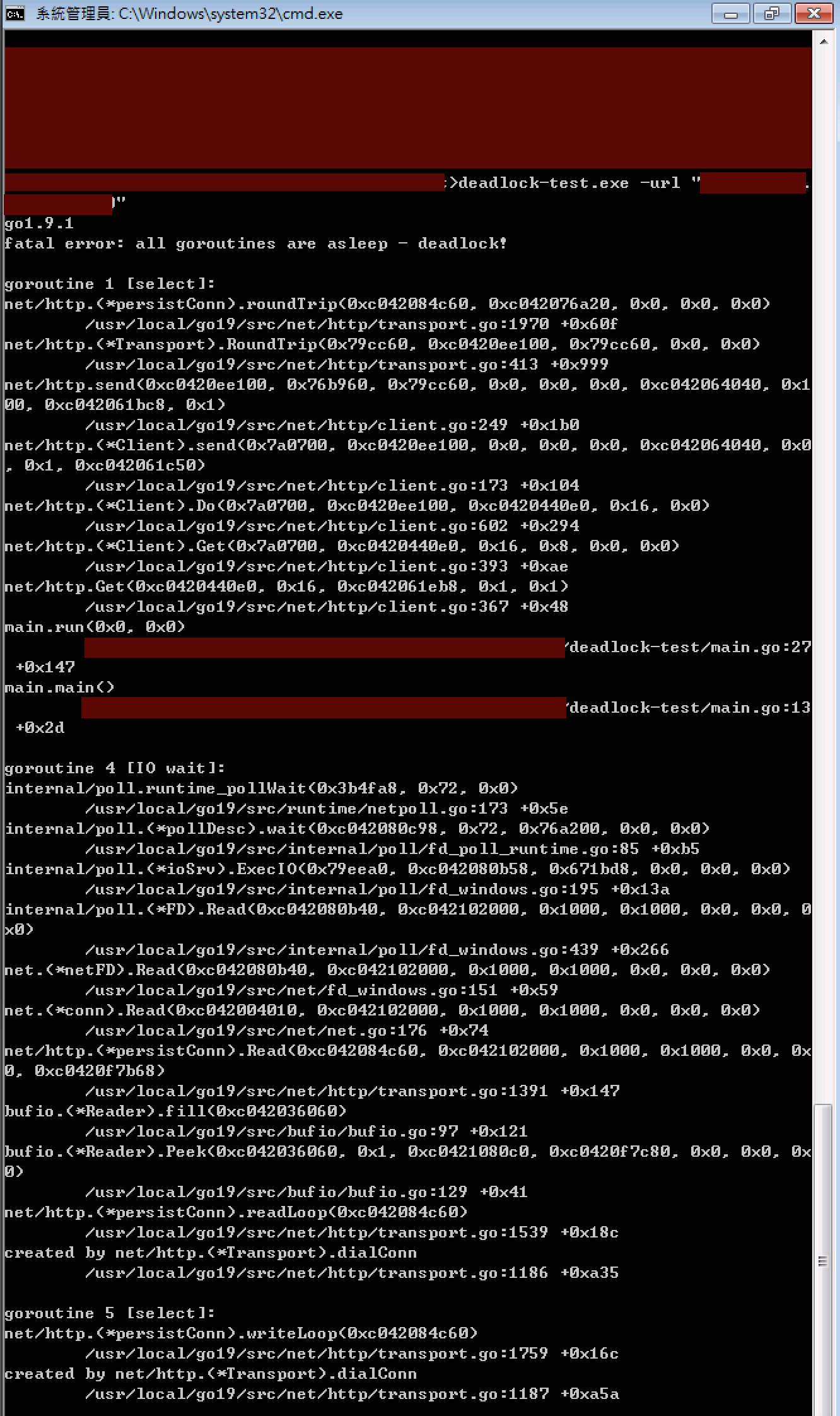

@davecheney I was able to get remote desktop access to the machine and was able to reproduce the deadlock panic using the reproduction program from this issue, as well as by running the httputil test from #20362 . So I think cygwin related trouble can be ruled out. Edit: Also managed to get a screenshot: |

|

I think this is likely a dup of the underlying problem in #20362, but it's OK to keep discussing it here until we are sure. Thanks for the very short reproduction program and for checking it against Go 1.7 and Go 1.8. Because this is new in Go 1.9 my first guess would be that the internal/poll changes introduced in Go 1.9 are not quite right on Windows. We've already found problems with them, which are slated to be fixed in Go 1.9.2. The interesting question is whether the fixes we have queued also address your issue, or else there are more issues lurking. The stack trace makes it look like package net is waiting on network I/O (goroutine 4), but that fact has not been communicated properly to the runtime, which sees all goroutines asleep and exits. Can you please try your test with Go 1.9.2rc2 (#22277 (comment)) and see if the problem is fixed there or perhaps changes in some way? Also, could you please in your environment before running the test? That will give us stack traces for the system goroutines as well, in particular the network poller (if any). |

|

We're just hit this issue, I can confirm it affects non-cygwin builds and I've reproduced it with a slightly amended reproduction case. package main

import (

"flag"

"io/ioutil"

"log"

"net/http"

"os"

"runtime"

)

func main() {

var (

url = flag.String("url", "", "URL to upload to")

attempts = flag.Int("attempts", 1, "max attempts")

)

log.Printf("%s\n", runtime.Version())

flag.Parse()

if err := run(*url, *attempts); err != nil {

log.Println(os.Stderr, err)

os.Exit(1)

}

}

func run(url string, attempts int) (err error) {

for i := 0; i < attempts; i++ {

res, err2 := http.Get(url)

if err2 != nil {

log.Println(os.Stderr, err2)

err = err2

continue

}

defer res.Body.Close()

resData, err2 := ioutil.ReadAll(res.Body)

if err != nil {

log.Println(os.Stderr, err2)

err = err2

continue

}

log.Printf("%d %s\n", res.StatusCode, res.Status)

log.Printf("%s\n", resData)

}

return err

}While it doesn't happen every time its easy to reproduce, usually under 10 runs. This is the output from a run with After the initial In our app after we saw this error during processing on a startup API call, then everything stopped working, not just the client but also the web server wired up to debug pprof also refused to respond preventing us from obtaining a stack trace. I'm wondering if its related to the net polling fix: bf0f692 This currently seems to be Windows specific, which in our case is Just in case this is relevant the test binary was generated using cross compile on FreeBSD. |

|

Testing go1.9.1 with bf0f692 reverted still exhibits the same behaviour unfortunately :( |

|

/cc @jessfraz @bketelsen

Are either of you able reproduce the issue (inside or outside Cygwin)?

… On 23 Oct 2017, at 22:28, Dave Cheney ***@***.***> wrote:

I’m sorry I don’t know the answer to that. All I know is the installation instructions for Windows recommend minGW and cmd.exe or powershell.

> On 23 Oct 2017, at 22:19, Felix Geisendörfer ***@***.***> wrote:

>

> @davecheney it's a bit tricky, because this machine is in a factory in china and I don't have physical access to it. But I'll try to have somebody on-site execute the example program above using cmd.exe as soon as possible.

>

> That being said, I'm curious, how is executing a Go program under cygwin is different from executing it in cmd.exe? The Go program is compiled under Linux (not in cygwin). The only reason we're using cygwin is because it's easier for us to manage machines running sshd under cygwin, than managing windows machines directly.

>

> —

> You are receiving this because you were mentioned.

> Reply to this email directly, view it on GitHub, or mute the thread.

>

|

|

It looks like e4371fb may contain a error with the conversion of the go runtime.nanotime to asm, specifically it subtracts startNano here: This looks like it might be cut and paste error from monotonic component of time.now aka time_now. The following fixes the hangs for me on windows amd64, although I'm still not clear why newer versions of Windows don't seem to be affected by this. diff --git a/src/runtime/sys_windows_amd64.s b/src/runtime/sys_windows_amd64.s

index 744e78c708..fd26dfe75f 100644

--- a/src/runtime/sys_windows_amd64.s

+++ b/src/runtime/sys_windows_amd64.s

@@ -486,7 +486,6 @@ loop:

SHLQ $32, CX

ORQ BX, CX

IMULQ $100, CX

- SUBQ runtime·startNano(SB), CX

MOVQ CX, ret+0(FP)

RET

useQPC: |

|

While this seems to fix the issue, its now clear that this change was intended as its explicitly mentioned, so more digging required. |

|

Just found an interesting difference between a machine which seems to work and one which doesn't, namely the monotonic time is always zero when running just the MonotonicString test on the broken machine. time.exe -test.v -test.run MonotonicString

=== RUN TestMonotonicString

--- PASS: TestMonotonicString (0.00s)

mono_test.go:250: Now() = 2017-10-24 15:20:05.1025971 -0700 PDT m=+0.000000000

PASSWorking Machine time.exe -test.v -test.run MonotonicString

=== RUN TestMonotonicString

--- PASS: TestMonotonicString (0.00s)

mono_test.go:250: Now() = 2017-10-24 15:20:02.3098048 -0700 PDT m=+0.001954200

PASSFor reference the time.exe was built using a go 1.9.1 on the working machine and transferred the broken machine. Update Its on't zero when running just the MonotonicString test. |

|

Thanks for looking into this. If the monotonic time is always zero, that is clearly a problem. Can you see if https://golang.org/cl/73230 fixes this? |

|

Change https://golang.org/cl/73230 mentions this issue: |

|

Never mind, that change clearly didn't work, though I don't know why. |

|

To clarify, its not always zero but it does appear to be always zero when running that single test, which is quite different from other versions, which when running the same test has never been zero in my test runs. I've not ruled out hardware differences causing this behaviour change yet. I'm continuing to test but with the change in nanotime() from absolute to relative I'm concerned we could be seeing a number of issues due to cases where zero was treated as a special case e.g. not yet intialised, which can now happen during early initialisation as well. |

I suspect you did not initialize qpcStartCounter and qpcMultiplier that are used by nowQPC and nanotimeQPC. But I would like to understand the problem before we change the code to automatically use nowQPC and nanotimeQPC. Alex |

|

@alexbrainman the summary of the facts I've found so far:

|

|

Thank you @stevenh for the update. Unfortunately I don't have any bright ideas. It would be nice if I could reproduce it here. Maybe I will try and find PC similar to yours. You PC is real PC or VM?

The TestMonotonicString calls time.Now at the very beginning and then print the value. It is reasonable for monotonic time to be close to 0. Maybe even 0 is OK if monotonic time changes slowly. Maybe change the test to sleep a little before calling time.Now and see what it prints. Also it might be useful to write program that calls time.Now in a loop and see how often monotonic time change, and run it on both good and bad computers. It might give us some clues. Perhaps http.Get assumes that monotonic time increases reasonable quickly, but real monotonic clock is slower than http.Get expects. Alex |

|

I'm still a little confused about why timing matters here. What if you do in main? That will certainly stop the deadlock from being diagnosed, since the program is no longer deadlocked. Does it also give the http.Get time to complete? |

|

I've made some more progress with this; there is definitely something in the runtime which breaks if diff --git a/src/runtime/time.go b/src/runtime/time.go

index abf200d7d3..e87b0fd461 100644

--- a/src/runtime/time.go

+++ b/src/runtime/time.go

@@ -309,4 +309,4 @@ func time_runtimeNano() int64 {

return nanotime()

}

-var startNano int64 = nanotime()

+var startNano int64 = nanotime() - 1The reason this effects Windows 2008 boxes more than newer versions is the default clock resolution on Windows 2008 is 15ms instead of the new 1ms default, so it has a larger window during which nanotime() can return zero. Two other things that prevent it, or at least prevent me from reproducing it, are:

I'm currently working my way though the |

|

I'm OK with that being the fix as long as we think that nanotime does in fact move forward eventually on the machines exhibiting the problem. |

|

Change https://golang.org/cl/73410 mentions this issue: |

|

I sent CL 74310. I'm comfortable with that minimal change being in Go 1.9.2, which is scheduled to go out today. Thanks for identifying such a nice fix. |

|

Moved to Go 1.9.2 but waiting to mark CL as approved for Go 1.9.2 until @ianlancetaylor and @aclements look at it. |

|

My hunch about a consumer of The runtime scheduler What I would like to understand @rsc is why do we want / need Currently this creates a disparity between platforms that use go and those that use asm for |

|

@stevenh I care that monotonic time (what package time gets from runtime.time_now) is in a known limited range, so that comparison is well-defined and we don't have to worry about wraparound. Defining that it starts at process start time is a way to do that. System boot time might also be a way to do that, but not all operating systems make that guarantee. Rather than assume the guarantee or work out which systems do guarantee and which don't, we just define monotonic time as relative to our process start. That also reinforces the point that monotonic times are meaningless outside the current process. The fact that nanotime either does or does not have the process start reference point is definitely confusing. I would not mind if the subtraction of startNano was pushed into the remaining implementations and removed from timestub.go, so that nanotime has process start = -1 on all systems. |

|

@rsc thanks for clarification, makes total sense. It would definitely be good to get all platforms aligned and this specific goal / criteria documented, as not only would it eliminate the confusion, but it would also provide a wider audience for testing which in turn should help prevent issues like this slipping into releases unnoticed. |

I'd be okay with this, too, though I would add two other things:

|

|

@aclements, both those SGTM. Want to open a separate issue for that? This issue will be closed when the fix lands in Go 1.9.2. |

|

Filed #22436. |

|

Per chat with @ianlancetaylor waiting for CL 73410 to pass the build dashboard, and then will approve for Go 1.9.2. |

|

CL 73410 OK for Go 1.9.2. |

|

Change https://golang.org/cl/73491 mentions this issue: |

…with low-res timers Otherwise low-res timers cause problems at call sites that expect to be able to use 0 as meaning "no time set" and therefore expect that nanotime never returns 0 itself. For example, sched.lastpoll == 0 means no last poll. Fixes #22394. Change-Id: Iea28acfddfff6f46bc90f041ec173e0fea591285 Reviewed-on: https://go-review.googlesource.com/73410 Run-TryBot: Russ Cox <rsc@golang.org> TryBot-Result: Gobot Gobot <gobot@golang.org> Reviewed-by: Ian Lance Taylor <iant@golang.org> Reviewed-by: Austin Clements <austin@google.com> Reviewed-on: https://go-review.googlesource.com/73491 TryBot-Result: Russ Cox <rsc@golang.org>

|

go1.9.2 has been packaged and includes: The release is posted at golang.org/dl. — golang.org/x/build/cmd/releasebot, Oct 26 21:09:26 UTC |

|

Thanks to everybody who worked on this for the incredibly fast fix! |

I already reported this issue in #20362 (which might be the same or related), but am opening a new issue as requested by @davecheney .

What version of Go are you using (

go version)?go version go1.9.1 linux/amd64Does this issue reproduce with the latest release?

Yes.

What operating system and processor architecture are you using (

go env)?What did you do?

I was able to reproduce the deadlock with a minimal test program that basically just executes a http GET request.

I ran the command in a loop and got the following results:

go1.8beta2-389-ge4371fb179commit mentioned in net/http/httputil: all goroutines are asleep - deadlock #20362 is probably not the root cause)Notes:

What did you expect to see?

No deadlock.

What did you see instead?

https://gist.github.com/felixge/a2efcaf098e1b8fa0ec1f7d67fb2481c

The text was updated successfully, but these errors were encountered: