
runtime: frequent blocking syscalls cause high sysmon overhead #18236


That looks like a Marvell ARMv5 chip, which we're proposing to drop in Go 1.9.

On Thu, Dec 8, 2016 at 6:49 AM, Brad Fitzpatrick wrote:

/cc @minux @cherrymui @davecheney


It's a Marvell Armada 370, which is ARMv7.

Phew!

On Thu, Dec 8, 2016 at 7:34 AM, Philip Hofer wrote:

It's a Marvell Armada 370, which is ARMv7


Yes, please drop ARMv5. I was secretly elated when I saw that was finally happening. There are too many wildly divergent implementations of ARM in the wild.

Yeah, I think timing a few getpid syscalls and adjusting the sysmon tick accordingly is reasonable.


In general, sysmon should back off to a 10ms delay unless it's frequently finding goroutines that are blocked in syscalls for more than 10ms or there are frequent periods where all threads are idle (e.g., waiting on the network or blocked in syscalls). Is that the case in your application?
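For reference, the backoff described here can be modeled in a few lines. This is a simplified, standalone rendering of the sleep logic in `sysmon` (`runtime/proc.go`) as it reads at the time of writing: 20us while sysmon keeps finding work, doubling once it has been idle for more than 50 consecutive cycles (about 1ms), capped at 10ms. `nextDelay` and `idleDelays` are illustrative names, not runtime functions, and units are microseconds.

```go
package main

import "fmt"

// nextDelay returns sysmon's next sleep in microseconds, given the previous
// delay and the number of consecutive cycles in which it found nothing to do.
func nextDelay(delay, idle uint32) uint32 {
	if idle == 0 {
		delay = 20 // found work last cycle: sleep 20us
	} else if idle > 50 {
		delay *= 2 // idle for roughly 1ms: start doubling
	}
	if delay > 10*1000 {
		delay = 10 * 1000 // never sleep longer than 10ms
	}
	return delay
}

// idleDelays lists the sleeps for n consecutive cycles in which sysmon
// finds nothing to do, starting from a busy state.
func idleDelays(n int) []uint32 {
	delays := make([]uint32, 0, n)
	var delay uint32
	for idle := uint32(0); idle < uint32(n); idle++ {
		delay = nextDelay(delay, idle)
		delays = append(delays, delay)
	}
	return delays
}

func main() {
	d := idleDelays(60)
	fmt.Println(d[0], d[50], d[51], d[59]) // 20 20 40 10000
}
```

In this model, any cycle in which sysmon retakes a P resets `idle` to 0, so a workload that keeps getting Ps retaken from syscalls never leaves the 20us regime.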

Perhaps I don't understand your application, but I don't see how these would help. There are two delays involved here: one is the minimum sysmon delay, which is 20us, and the other is the retake delay, which is 10ms. I think both of your suggestions aim at adjusting the retake delay, but it sounds like that's not the problem here.

I think the best way to handle the minimum delay is to eliminate it by making sysmon event-driven. The minimum delay is there because sysmon is sampling what is ultimately a stream of instantaneous events. I believe it could instead compute when the next interesting event will occur and just sleep for that long. This is slightly complicated by how we figure out how long a goroutine has been executing or in a syscall, but in this model the minimum delay controls the variance we're willing to accept on that. In practice, that variance can be up to 10ms right now, which would suggest a minimum tick of 10ms if sysmon were event-driven.


@aclements Thanks for the detailed reply. We do, in fact, have all threads blocked in syscalls quite frequently. There's only one P, and the only meaningful thing this hardware does is move data from the network to the disk and vice versa. (We spend a good chunk of time in …)

Sorta related: #6817, #7903 (we already have a semaphore around blocking syscalls).

Probably also worth pointing out that this hardware definitely can't hit a 1ms timer deadline reliably under Linux, and struggles to meet a 10ms deadline with less than 2ms of overrun.

In case it helps, here's the hack that bought us a perf boost:

Sorry, bumping this again. (But I assume Igneous has been running their own builds for the past two years anyway.)

Thanks for the bump @bradfitz. Instead of the patch listed above, we opted to put a semaphore in front of disk I/O that keeps us in the optimal syscall/thread territory and avoids this issue.

Is this still an issue or should we go ahead and close this?

I'm not aware of any runtime re-architecting that would have fixed the root cause here, so I think it's still an issue. A lot of projects seem to have independently adopted a semaphore for I/O as a workaround.

Perhaps the runtime should respect an environment variable `GOMAXM` that explicitly limits the number of "m"s spawned.

For bleeding-edge Linux systems, there's also `io_uring`, which would fix the issue altogether.

On 6/25/19, Austin Clements wrote:

Is this still an issue or should we go ahead and close this?


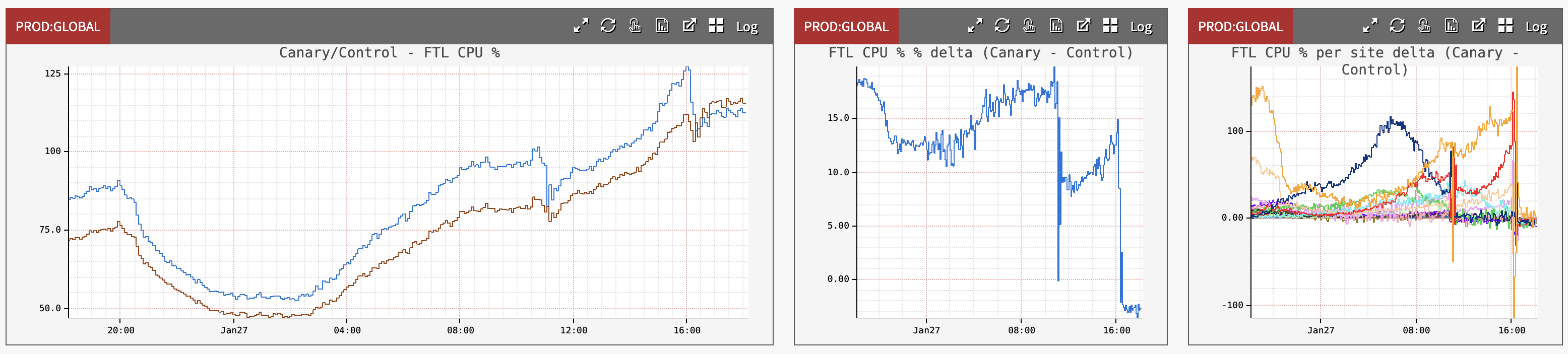

While troubleshooting the CPU increase of our HTTP server since Go 1.14 (#41699), I've noticed that … The graph below shows canary results:

This seems to indicate that sysmon can add significant overhead, and that the overhead grows as the server becomes busier. PS: we run on FreeBSD.

@agirbal If your program uses many timers and often resets those timers to fire earlier, then the code in the future 1.16 release will be much more efficient than the code in 1.15. Is it possible for you to test your program with the 1.16rc1 release that just came out? Thanks.

@ianlancetaylor OK, I just tried 1.16rc1 and the issue seems to be gone! That is amazing news; we can get back on top of our upgrades. Thank you. I have commented further in #41699. To get back to the sysmon issue, in the graph below:

The 4-5% savings is fairly significant for us. Is it acceptable to run with a constant sysmon delay of 10ms, or are there obvious downsides? Would you consider reducing the sysmon overhead? Thanks!

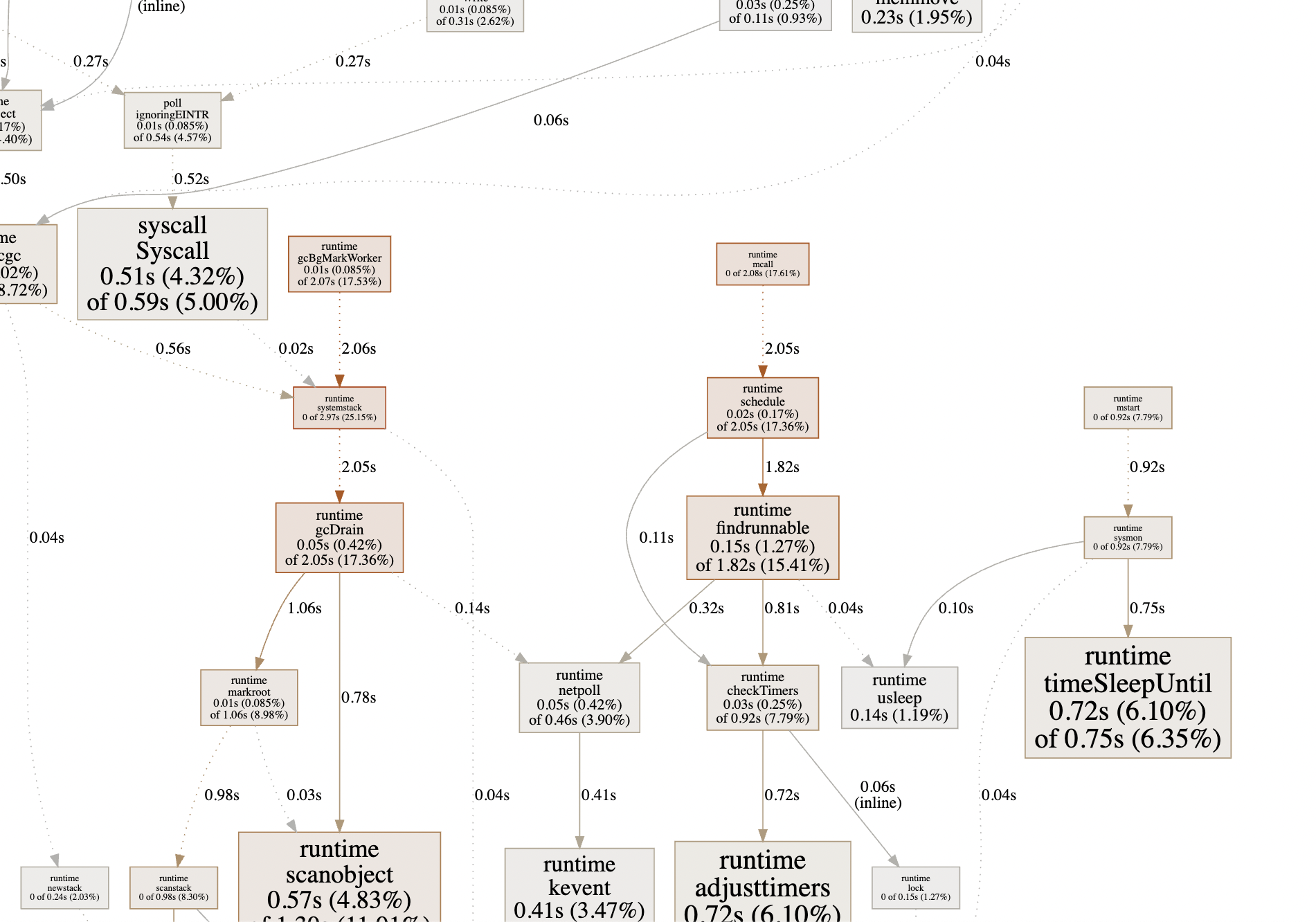

I think this is worth considering. Simply changing the delay to 10ms without any other changes may be too aggressive, but perhaps we can do some work to make sysmon less active. One of the major reasons not to extend the delay previously was that sysmon helped with timer expirations; it no longer does that as of 1.16. The biggest remaining reason is retaking Ps from blocking syscalls (which occurs after 20us). If we increase the delay to 10ms, then retake will almost never occur. Could you share profiles of what your application looks like now on 1.16, with and without the sysmon reduction?


It occurs to me that we could handle blocking syscalls by having … We would still need …

It seems to me like the main cost would be arming the timer. Since a 20us timer is almost certainly the next timer, I think that basically every …

Edit for the last part: I guess it would need to be any …


True, waking the poller doesn't make sense. Having …

The hard-coded 20us sysmon tick in the runtime appears to be far too aggressive for minority architectures. Our storage controllers run a single-core 800MHz ARM CPU, which runs single-threaded workloads between 10 and 20 times slower than my desktop Intel machine.

Most of our product-level benchmarks improved substantially (5-10%) when I manually adjusted the minimum tick to 2ms on our ARM devices. (Our use case benefits from particularly long syscall ticks, since we tend to make `read` and `write` syscalls with large buffers, and those can take a long time even if they never actually block in I/O. Also, as you might imagine, the relative cost of thread creation and destruction on this system is quite high.)

Perhaps the runtime should calibrate the sysmon tick based on the performance of a handful of trivial syscalls (`getpid()`, etc.). Similarly, the tick could be adjusted upwards if an `m` wakes up within a millisecond (or so) of being kicked off its `p`. Perhaps someone with a better intuition for the runtime can suggest something heuristically less messy.

Thanks,

Phil
